Cooking up Faster Mobile Image Processing

- By Dian Schaffhauser

- 12/02/15

People who want to edit photos on their smartphone frequently rely on apps that send their images to a server somewhere to do the heavy lifting and send them back in modified form. Because images tend to be large, this process consumes data bandwidth and can be time-consuming for the upload and download. Another option is to manipulate images directly on the phone itself, but those apps tend to drain battery power.

A team of researchers from MIT, Stanford University and Adobe has come up with an alternative to both of those approaches: the use of a "transform recipe." When the user sends a raw photo as it has been captured by the phone camera, the server returns a greatly compressed version of the image along with a "recipe" to help the app reconstruct a "high-fidelity approximation." The system can reduce the bandwidth consumed by server-based image processing by 98.5 percent and power consumption by as much as 85 percent.
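To put the bandwidth figure in perspective, the arithmetic can be sketched as follows. The 8 MB baseline is a hypothetical round-trip size chosen only for illustration; it does not come from the researchers' report, and the helper name is likewise made up.

```python
def remaining_after_savings(baseline, percent_saved):
    """Return what is left of a baseline cost after a percentage saving."""
    return baseline * (1.0 - percent_saved / 100.0)


# Hypothetical: 8 MB transferred in total by a traditional upload/download cycle.
baseline_mb = 8.0
recipe_mb = remaining_after_savings(baseline_mb, 98.5)
print(f"about {recipe_mb:.2f} MB instead of {baseline_mb:.0f} MB")
```

Under that assumption, a 98.5 percent reduction leaves roughly 0.12 MB to move over the network.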

To save bandwidth while uploading a file from the user's phone, the researchers' system sends it as a low-quality JPEG, a common file format for digital images. The JPEG sent to the server also has a considerably lower resolution than the original, around one percent of it. So the system introduces "high-frequency" albeit meaningless noise into the picture to increase its resolution. The presence of that noise prevents the program from relying too heavily on color consistency across parts of that tiny image when calculating how to transform the picture.
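The shrink-then-restore step can be illustrated with a minimal sketch. It assumes a grayscale image stored as a list of rows, tile averaging for the downsample, pixel repetition for the upsample and uniform random noise; none of these choices are taken from the researchers' system, and the function names are hypothetical.

```python
import random


def downsample(image, factor):
    """Shrink a grayscale image by averaging each factor x factor tile."""
    height, width = len(image), len(image[0])
    small = []
    for top in range(0, height - height % factor, factor):
        row = []
        for left in range(0, width - width % factor, factor):
            tile = [image[y][x]
                    for y in range(top, top + factor)
                    for x in range(left, left + factor)]
            row.append(sum(tile) / len(tile))
        small.append(row)
    return small


def upsample_with_noise(small, factor, amplitude, seed=0):
    """Repeat each pixel factor x factor times, then add uniform noise.

    The noise carries no image information; it only breaks up the flat
    blocks that plain pixel repetition would otherwise produce.
    """
    rng = random.Random(seed)
    big = []
    for small_row in small:
        for _ in range(factor):
            noisy_row = []
            for value in small_row:
                for _ in range(factor):
                    noisy = value + rng.uniform(-amplitude, amplitude)
                    # Clamp to the usual 8-bit intensity range.
                    noisy_row.append(min(255.0, max(0.0, noisy)))
            big.append(noisy_row)
    return big


photo = [[10, 20, 30, 40],
         [50, 60, 70, 80],
         [90, 100, 110, 120],
         [130, 140, 150, 160]]
small = downsample(photo, 2)                        # 2 x 2 image sent upstream
restored = upsample_with_noise(small, 2, amplitude=3.0)  # 4 x 4 again, with noise
```

Without the noise, every restored tile would be perfectly flat, which is the kind of artificial color consistency the article says the system tries not to rely on.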

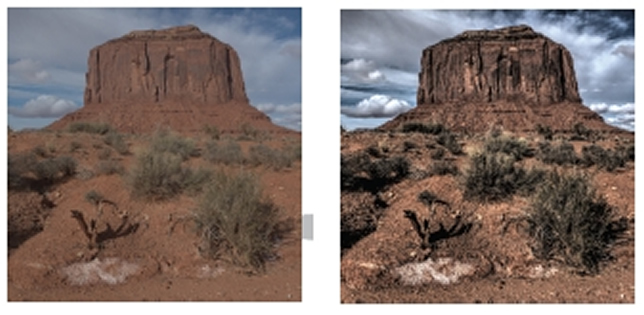

Then the program manipulates the image by sharpening edges, heightening contrast and adjusting colors, among other changes.

From there the software breaks the image into small pieces and, for each piece, describes the effect the manipulation had on it. That description becomes the recipe for that portion of the image. Based on experimentation, the researchers found that the best results came when they used about 25 parameters, which serve as the directions in the recipe. The result is returned to the phone, and the app follows the recipe to perform the modifications it describes.
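The idea of a per-piece recipe can be sketched in a much simplified form. This example assumes a grayscale image and only two parameters per block (a gain and an offset found by least squares) rather than the roughly 25 the researchers settled on; the function names and the block size are hypothetical, and this is not the researchers' actual model.

```python
def fit_gain_offset(src, dst):
    """Least-squares gain and offset so that gain * src + offset ~ dst."""
    n = len(src)
    mean_s = sum(src) / n
    mean_d = sum(dst) / n
    var_s = sum((s - mean_s) ** 2 for s in src)
    if var_s == 0:
        # Flat block: a gain cannot be estimated, so keep only a shift.
        return 1.0, mean_d - mean_s
    cov = sum((s - mean_s) * (d - mean_d) for s, d in zip(src, dst))
    gain = cov / var_s
    return gain, mean_d - gain * mean_s


def block_values(image, top, left, size):
    """Collect the pixels of one block, clipped at the image border."""
    return [image[y][x]
            for y in range(top, min(top + size, len(image)))
            for x in range(left, min(left + size, len(image[0])))]


def build_recipe(before, after, size):
    """Server side: describe the edit as one (gain, offset) pair per block."""
    recipe = {}
    for top in range(0, len(before), size):
        for left in range(0, len(before[0]), size):
            recipe[(top, left)] = fit_gain_offset(
                block_values(before, top, left, size),
                block_values(after, top, left, size))
    return recipe


def apply_recipe(image, recipe, size):
    """Phone side: replay the recipe on the locally stored image."""
    out = [list(row) for row in image]
    for (top, left), (gain, offset) in recipe.items():
        for y in range(top, min(top + size, len(image))):
            for x in range(left, min(left + size, len(image[0]))):
                out[y][x] = gain * image[y][x] + offset
    return out
```

In this sketch the server would call `build_recipe` on its degraded copy and the edited result, and the phone would call `apply_recipe` on its own full-quality photo, so only the small table of parameters needs to travel back.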

While that process does consume processing cycles on the phone, the recipe approach takes only a fraction of what it would have taken to do the same manipulations on the phone or to download a server-modified image in the traditional way. The researchers found that the overall energy savings were somewhere between 50 and 85 percent and the time savings between 50 and 70 percent.

"There are a lot of things that we're coming up with at Adobe Research that take a long time to run on the phone," said Geoffrey Oxholm, a research and innovation engineer at Adobe who was not involved in the project. "Or it's a big hassle to optimize them for every single mobile platform, so it's attractive to be able to optimize them really well on the server. It's almost like cheating: You get to use a big huge server and not pay as much to use it."

"Overall," the researchers concluded in their report, "the development of good recipes requires a trade-off between expressiveness, compactness and reconstruction cost. In some scenarios, enhancement-specific recipes might provide significant gains. We believe our framework provides a strong and general foundation on which to build future systems."

The research paper, which was recently presented at SIGGRAPH 2015, is available on the MIT Web site.

About the Author

Dian Schaffhauser is a former senior contributing editor for 1105 Media's education publications THE Journal, Campus Technology and Spaces4Learning.