Preschoolers Practice Programming with Help from MIT

- By Dian Schaffhauser

- 03/12/15

Can four-year-olds be taught programming concepts? A research project at MIT is examining just that question. The researchers in MIT's Media Lab are creating a system that lets children aged four to eight control a robot by showing it stickers they've placed on laminated sheets of paper.

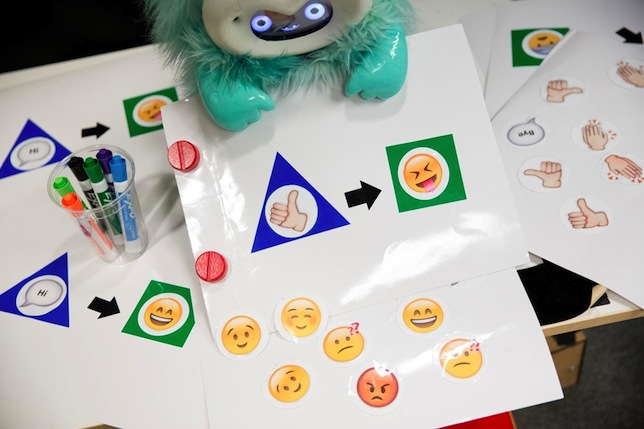

In a brief paper presented at the recent Association for Computing Machinery and Institute of Electrical and Electronics Engineers' International Conference on Human-Robot Interaction, the researchers described the Social Robot Toolkit. The toolkit works specifically with an interactive robot called Dragonbot, developed by the Personal Robots Group at the Media Lab. Dragonbot features audio and visual sensors, a speech synthesizer, multiple expressive gestures and a video screen for a face that can assume expressions.

The programming interface uses a set of reusable vinyl stickers that represent triggers and events. The child can place a sticker on a blue diamond for an action he or she performs, such as clapping hands, and a sticker on a green diamond for an action the robot takes, such as saying something or changing facial expression. A rule is created by placing a black arrow between the two events. The robot can be programmed with several rules, allowing the kids to try out sequences and "non-determinism," the researchers reported. The children could also draw their own verbal cues and responses onto blank stickers.
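The rule model described above can be sketched in a few lines of Python. This is only an illustration, not the toolkit's actual implementation: the `Rule` class, the `respond` function and the sticker names are hypothetical, and treating "non-determinism" as a random choice among rules that share a trigger is an assumption about what the researchers meant.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One sticker rule: a trigger (blue diamond) mapped to robot responses (green diamonds)."""
    trigger: str
    responses: tuple


def respond(rules, trigger, rng=random):
    """Return the robot's responses for a trigger, or an empty tuple if no rule matches.

    If several rules share the trigger, one is picked at random
    (an assumed reading of "non-determinism").
    """
    matching = [rule for rule in rules if rule.trigger == trigger]
    if not matching:
        return ()
    return rng.choice(matching).responses


# Hypothetical sticker names, chosen for illustration only.
rules = [
    Rule("clap_hands", ("say_hello", "smile")),  # a two-step sequence
    Rule("clap_hands", ("look_surprised",)),     # same trigger, different response
    Rule("wave", ("nod_head",)),
]

print(respond(rules, "wave"))        # only one rule matches this trigger
print(respond(rules, "clap_hands"))  # either of the two matching rules
```

Keeping each rule as a plain trigger-to-responses pair mirrors the arrow-between-diamonds layout of the sticker sheet, so adding a rule is just adding another entry to the list.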

The pilot profiled in the paper consisted of teaching the children to use the toolkit, observing their abilities and questioning them on their comprehension before, during and after the interaction. To begin the instruction, a researcher would ask the child to place a single response sticker onto his or her sheet, and the robot would execute that response. Then they would move on to a program. When presented with a sequence, the robot would nod its head and say, "I've got it." Then it would execute the chain of command whenever it received the trigger.
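The teach-then-trigger flow in the pilot might look roughly like the following sketch. Everything here is an assumption made for illustration: `SketchRobot`, its `learn` and `on_event` methods and the action names are hypothetical, and the class only records actions in a log rather than driving a real robot.

```python
class SketchRobot:
    """Hypothetical stand-in for the robot; it records actions instead of moving."""

    def __init__(self):
        self.programs = {}  # trigger -> list of response steps
        self.log = []       # everything the robot has "done", in order

    def learn(self, trigger, responses):
        """Store a program, then acknowledge it with a nod and a spoken confirmation."""
        self.programs[trigger] = list(responses)
        self.log += ["nod_head", 'say: "I\'ve got it."']

    def on_event(self, event):
        """Run the stored chain if the event is a known trigger; otherwise do nothing."""
        steps = list(self.programs.get(event, []))
        self.log += steps
        return steps


robot = SketchRobot()
robot.learn("clap_hands", ["say_hello", "smile"])
print(robot.on_event("clap_hands"))  # ['say_hello', 'smile']
print(robot.on_event("wave"))        # []
```

Separating `learn` from `on_event` reflects the two moments the article describes: the robot first confirms it has the program, and only later runs it when the trigger arrives.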

"It's programming in the context of relational interactions with the robot," said Edith Ackermann, a developmental psychologist and visiting professor in the Personal Robots Group, who co-authored that paper. "This is what children do — they're learning about social relations. So taking this expression of computational principles to the social world is very appropriate."

In this first phase of the study, a researcher would manually enter the sequences set up by the children using a tablet computer with a touch screen showing all of the various icons. But the robotics group is developing a computer vision system that would enable the kids to convey new instructions to the robot by holding pages of stickers up to its camera.

One result that came out of the experiment was that children could articulate what programming was. As the researchers related in the paper, "We asked one 8 year old boy: 'How do robots decide what to do?' Before the session began, he answered, 'They are probably...wires and everything that make them do things and everything, electric gadgets and everything.' After the session with the toolkit, his answer changed to, 'That electric gadgets and everything make something inside of it get programed to what you say and what you do, so that the robot does what you want.'"

About the Author

Dian Schaffhauser is a former senior contributing editor for 1105 Media's education publications THE Journal, Campus Technology and Spaces4Learning.