Artificial Intelligence

UC Berkeley Develops 'Deep Learning' for Robot

- By Dian Schaffhauser

- 05/21/15

The same sophisticated approach to pattern matching that Siri and Cortana use to respond to questions more accurately is now helping a robot at the University of California, Berkeley, learn how to screw caps onto bottles and hang a clothes hanger on a rack, part of an ever-growing repertoire of dexterous skills. Rather than programming each type of task individually, the researchers are relying on algorithms that direct the robot to learn by trial and error as it goes, which more closely resembles the way humans learn.

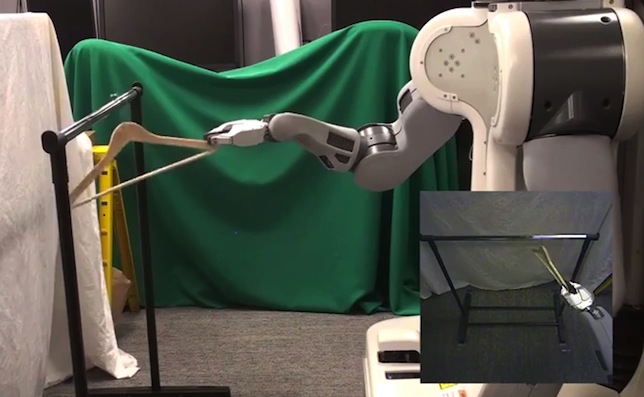

Among the activities already mastered by "BRETT" (Berkeley Robot for the Elimination of Tedious Tasks) are assembling a toy plane, fitting the claw of a toy hammer under a nail with various grasps and figuring out where the square peg belongs. BRETT is actually a Personal Robot 2 (PR2) produced by Willow Garage.

"What we're reporting on here is a new approach to empowering a robot to learn," said Pieter Abbeel, a professor in the university's Department of Electrical Engineering & Computer Sciences, in a prepared statement. "The key is that when a robot is faced with something new, we won't have to reprogram it. The exact same software, which encodes how the robot can learn, was used to allow the robot to learn all the different tasks we gave it."

Most robotic applications work in a controlled environment "where objects are in predictable positions," added Trevor Darrell, co-researcher and director of the Berkeley Vision and Learning Center. "The challenge of putting robots into real-life settings, like homes or offices, is that those environments are constantly changing. The robot must be able to perceive and adapt to its surroundings."

The project turned to an area of artificial intelligence known as "deep learning," which is loosely inspired by how the human brain perceives and interacts with the world through ever-changing neural circuitry. The robot's learning algorithm includes a "reward" function that scores how well it performs a given task. Like a game of "hot and cold," motions that bring the robot closer to completing the task earn a higher score, which feeds back into the neural net so the robot can "learn" which movements work best for the activity.
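To make the "hot and cold" idea concrete, here is a minimal Python sketch; it is not BRETT's actual software. It assumes a hypothetical reaching task with made-up coordinates (`GOAL`, `rollout` and `trial_and_error` are illustrative names), scores each attempt by its negative distance to the goal, and swaps in simple random-search hill climbing for the deep neural network the Berkeley team used. Only the reward-feedback loop is meant to carry over.

```python
import math
import random

# Hypothetical task: move a gripper tip from a start point toward a goal point.
GOAL = (0.5, 0.2)  # assumed target coordinates, purely illustrative


def reward(position, goal=GOAL):
    """'Hot and cold' score: the closer the gripper ends up to the goal,
    the higher (less negative) the reward. A perfect reach scores 0.0."""
    return -math.dist(position, goal)


def rollout(action, start=(0.0, 0.0)):
    """Apply a single 2-D displacement to the start point and return
    where the gripper ends up."""
    return (start[0] + action[0], start[1] + action[1])


def trial_and_error(steps=2000, noise=0.05, seed=0):
    """Random-search hill climbing (a stand-in for training a neural net):
    try a small random tweak to the current movement and keep it only
    if the reward improves."""
    rng = random.Random(seed)
    best_action = (0.0, 0.0)
    best_score = reward(rollout(best_action))
    for _ in range(steps):
        candidate = (best_action[0] + rng.gauss(0, noise),
                     best_action[1] + rng.gauss(0, noise))
        score = reward(rollout(candidate))
        if score > best_score:  # "warmer": keep the better movement
            best_action, best_score = candidate, score
    return best_action, best_score


if __name__ == "__main__":
    action, score = trial_and_error()
    print(f"learned action={action}, reward={score:.4f}")
```

Because the reward is the negative distance to the goal, any movement that ends closer scores higher, which is the "warmer" signal the researchers describe. In a deep-learning system, that score would instead be used to adjust the weights of the neural net.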

The robot's neural net tracks the connections among 92,000 parameters, compared with about 100 billion neurons in the human brain.

According to the researchers, given the relevant coordinates for the start and end of a task, BRETT could master a typical assignment in about 10 minutes. When the robot didn't know where objects were located and had to learn those coordinates as well, the learning process could take about three hours.

With more data, said Abbeel, the robot can learn more complex operations. "We still have a long way to go before our robots can learn to clean a house or sort laundry, but our initial results indicate that these kinds of deep learning techniques can have a transformative effect in terms of enabling robots to learn complex tasks entirely from scratch. In the next five to 10 years, we may see significant advances in robot learning capabilities through this line of work."

The research is coming out of a new People and Robots Initiative introduced at the University of California's Center for Information Technology Research in the Interest of Society (CITRIS). CITRIS is focused on projects researching how people and machines can work together. The research team will present a poster session on its findings at the International Conference on Robotics and Automation (ICRA), taking place at the end of the month in Seattle.

The work is being funded in part by the Defense Advanced Research Projects Agency, the Office of Naval Research, the United States Army Research Laboratory and the National Science Foundation.

About the Author

Dian Schaffhauser is a former senior contributing editor for 1105 Media's education publications THE Journal, Campus Technology and Spaces4Learning.