Research: Ordinary People Turn into Trolls Too

- By Dian Schaffhauser

- 06/12/17

Image: Twitter.

Online trolls are made, not born. Often, trolling stems from how a person is feeling at the moment and whether others in the discussion are already behaving like trolls. Those are the conclusions of a research project at Cornell and Stanford that examined antisocial behavior in online communities.

According to "Anyone Can Become a Troll: Causes of Trolling Behavior in Online Discussions," trolls are people who post disruptive messages in Internet discussions with the intent of upsetting others. While previous research suggested that trolling is "confined to a vocal and antisocial minority," such as sociopaths, the Cornell and Stanford researchers concluded that "ordinary people can engage in such behavior as well." They reached that conclusion by inducing trolling in an online experiment.

They recruited people through Amazon's Mechanical Turk service to participate in a discussion group about current events. First, participants took a five-minute quiz with 15 open-ended questions involving logic, math and words. Participants were divided into two groups, one of which received questions that were harder to answer in the time allotted. At the end of the quiz, participants received automatically generated scores and were told whether they had performed better or worse than average. Those in the "positive" group were told they had performed better than average; those in the "negative" group were told the opposite. The goal of the quiz was to see whether participants' moods before joining a discussion influenced their subsequent trolling.

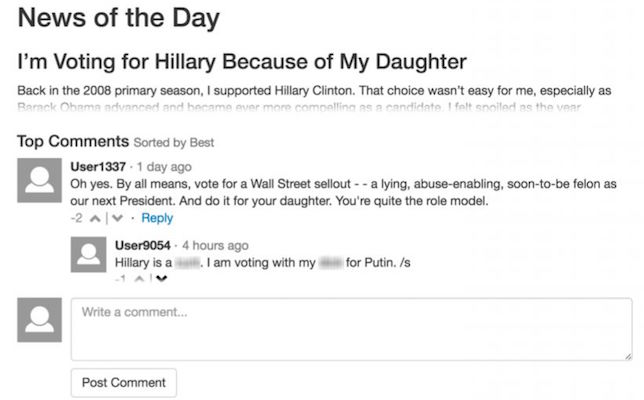

Next, participants completed 65 Likert-scale questions about how they were feeling. After that, they were told to read a short article and take part in an online discussion to help the researchers test a comment-ranking algorithm. The article, to which comments had already been appended, argued that women should vote for Hillary Clinton instead of Bernie Sanders in the Democratic primaries leading up to the 2016 presidential election. Those in the positive group saw more innocuous comments; those in the negative group were exposed to troll posts, such as: "Oh yes. By all means, vote for a Wall Street sellout — a lying, abuse-enabling, soon-to-be felon as our next President. And do it for your daughter. You’re quite the role model." All of the pre-existing comments were taken from the comments on the original article and from other forums where the same topic was being discussed.

The results showed that both a negative mood and exposure to bad examples could lead to offensive posts. Participants who were in a negative mood and saw troll posts were nearly twice as likely to contribute a troll post as those who were in a positive mood and saw innocuous comments (68 percent vs. 35 percent).

The paper was recognized earlier this year during the 20th ACM Conference on Computer-Supported Cooperative Work and Social Computing.

In a separate but related study, the same researchers analyzed 16 million posts on CNN.com, focusing on those flagged by moderators, and used computational text analysis and human review of samples to confirm that the flagged posts qualified as trolling. They found that the more of the first four posts in a discussion were flagged, the more likely the fifth post was to be flagged too. Even if only one of the first few posts was flagged, the fifth post was more likely to be flagged as well. Those results were described in "Antisocial Behavior in Online Discussion Communities," which was presented at the AAAI International Conference on Web and Social Media in 2015.
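To make the flagged-post analysis concrete, here is a minimal Python sketch of how such a conditional rate could be tallied. It is not the researchers' code: the input format (each discussion as a list of True/False moderator flags in posting order) and the function name `fifth_post_flag_rate` are hypothetical, and the toy data is invented for illustration rather than drawn from CNN.com.

```python
from collections import defaultdict


def fifth_post_flag_rate(discussions):
    """Estimate P(fifth post flagged | k of the first four flagged) for each k.

    Hypothetical input: each discussion is a sequence of booleans
    (True = flagged by a moderator), listed in posting order.
    """
    counts = defaultdict(lambda: [0, 0])  # k -> [fifth post flagged, discussions seen]
    for posts in discussions:
        if len(posts) < 5:
            continue  # a fifth post is needed to observe the outcome
        k = sum(posts[:4])  # True counts as 1, False as 0
        counts[k][1] += 1
        if posts[4]:
            counts[k][0] += 1
    return {k: flagged / total for k, (flagged, total) in sorted(counts.items())}


# Invented toy data, for illustration only.
F, T = False, True
toy = [
    [F, F, F, F, F],
    [F, F, F, F, F],
    [F, F, F, F, T],
    [T, F, F, F, T],
    [F, T, F, F, F],
    [T, T, F, F, T],
    [T, T],  # too short: skipped
]
rates = fifth_post_flag_rate(toy)
print(rates)  # in this toy data the rate rises as k grows
```

Grouping discussions by k and dividing within each group is the simplest way to see the "contagion" pattern the article describes; a real analysis would also need to account for topic, timing and moderation practices.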

The research team has proposed building "troll reduction" into the design of discussion groups. For example, a "situational troller" who was very recently involved in a heated discussion could be given a timeout from posting until he or she has cooled off. Likewise, moderators could remove troll posts to limit "contagion."
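The timeout idea could be implemented in many ways. The Python sketch below assumes one simple rule that the researchers did not specify: once any of a user's posts is flagged (used here as a stand-in for "involved in a heated discussion"), that user cannot post again until a fixed cooldown has elapsed. The class `PostingCooldown`, its methods and the 15-minute default are all hypothetical.

```python
class PostingCooldown:
    """Hypothetical timeout policy: after a user's post is flagged,
    block further posts from that user for `cooldown_seconds`."""

    def __init__(self, cooldown_seconds: float = 15 * 60):
        self.cooldown_seconds = cooldown_seconds
        self._last_flagged: dict[str, float] = {}  # user -> latest flag time (s)

    def record_flag(self, user: str, timestamp: float) -> None:
        """Note that one of `user`'s posts was flagged at `timestamp` (seconds)."""
        previous = self._last_flagged.get(user, timestamp)
        self._last_flagged[user] = max(timestamp, previous)

    def can_post(self, user: str, timestamp: float) -> bool:
        """Return True if the user was never flagged or the cooldown has elapsed."""
        last = self._last_flagged.get(user)
        return last is None or timestamp - last >= self.cooldown_seconds


# Example: a 10-minute cooldown for a user flagged at t = 1000 s.
policy = PostingCooldown(cooldown_seconds=600)
policy.record_flag("alice", 1000.0)
print(policy.can_post("alice", 1300.0))  # False: only 300 s have passed
print(policy.can_post("alice", 1600.0))  # True: 600 s have passed
```

Passing timestamps in explicitly, rather than reading the clock inside the class, keeps the policy easy to test; a real forum would also have to decide what counts as "heated" beyond a single flag.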

"Rather than banning all users who troll and violate community norms, also considering measures that mitigate the situational factors that lead to trolling may better reflect the reality of how trolling occurs," the researchers concluded.

About the Author

Dian Schaffhauser is a former senior contributing editor for 1105 Media's education publications THE Journal, Campus Technology and Spaces4Learning.