From Digital Native to AI-Empowered: Learning in the Age of Artificial Intelligence

The upcoming generation of learners will enter higher education empowered by AI. How can institutions best serve these learners and prepare them for the workplace of the future?

The artificial intelligence revolution is upon us, marking a significant leap for the future of both work and education. Of all past technological surges, the one it most closely resembles is the widespread adoption of the Web in the 1990s. As someone deeply involved in the nexus of learning and technology with a focus on learning experience design (LxD), I see key similarities and differentiators as AI emerges.

Perhaps most striking is that AI adoption by the general public is advancing at a pace surpassing that of its digital forebear. The progression is notably more rapid; the adoption more ubiquitous; and the potential reach near limitless. AI innovations emerge within days, in contrast to the months-long development cycles of early 2000s Web applications. This pace prompts reflection: How will this shape the upcoming generation of learners? What will their work landscape look like? Which skills will they need to cultivate? Furthermore, how can we address the potential challenges of an AI-driven divide to ensure equitable access for all? The time to reflect on these questions is today, not tomorrow.

Digital Native vs. AI-Empowered

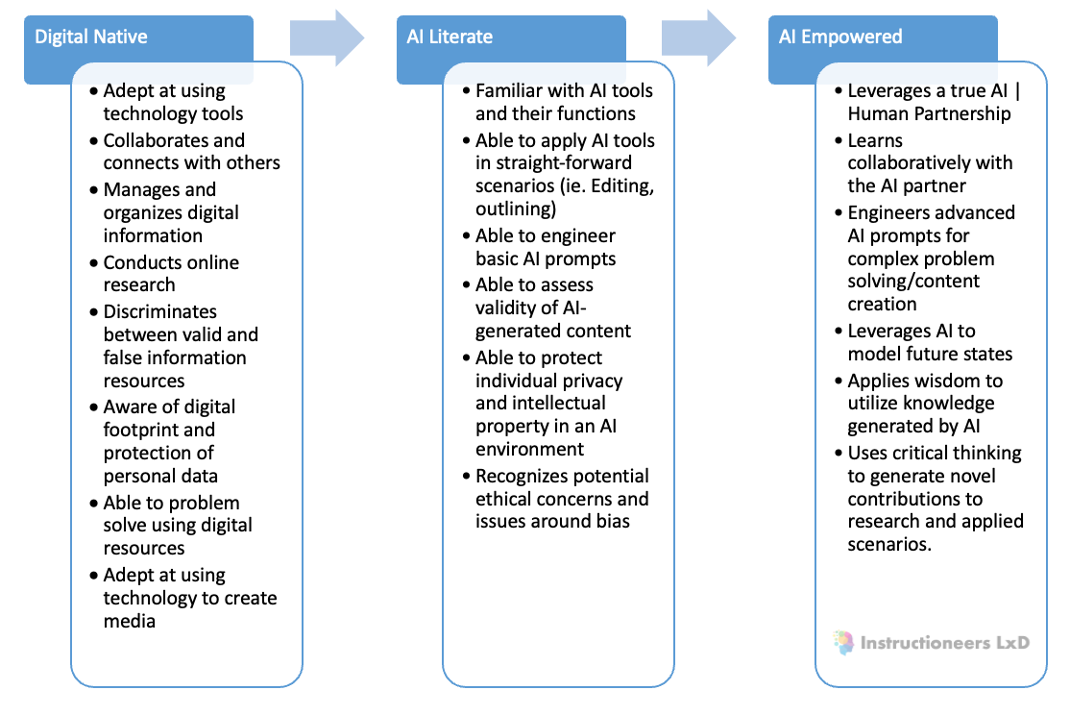

Years ago, the phrases "digital native" and "digital immigrant" were introduced as conceptual frameworks to understand generational technology proficiency. The analogy drew parallels to how children raised bilingual from birth typically exhibit more ease and fluency than those who learn a second language in adulthood. However, these terms are problematic due to over-generalization and potential cultural appropriation implications. Issues also arose around a digital divide; these have not been completely resolved, and AI may exacerbate them. By the 2000s, educators expected learners to demonstrate digital literacy, adaptability to new tools, and proficiency in multitasking across various digital platforms. Over time, several of these assumptions were determined to be inaccurate. In my experience with undergraduate students, these learners responded positively when technology was integrated into guided and active learning environments. They learned through collaboration, connection, and curation. How can we enhance active, engaged learning in the age of AI?

Dr. Chris Dede, of Harvard University and Co-PI of the National AI Institute for Adult Learning and Online Education, spoke about the differences between knowledge and wisdom in AI-human interactions in a keynote address at the 2022 Empowering Learners for the Age of AI conference. He drew a parallel between Star Trek: The Next Generation characters Data and Picard during complex problem-solving: While Data offers the knowledge and information, Captain Picard offers the wisdom and context from a leadership mantle, and determines that knowledge's relevance, timing, and application.

The forthcoming generation of AI-empowered learners will need a profound understanding of the nuances between knowledge and wisdom. They will need to demonstrate stronger abilities in critical thinking and handling ethical challenges, all the while appreciating the essence of human experiences. Using AI tools, learners will bear the responsibility of ensuring fairness, non-bias, and societal welfare.

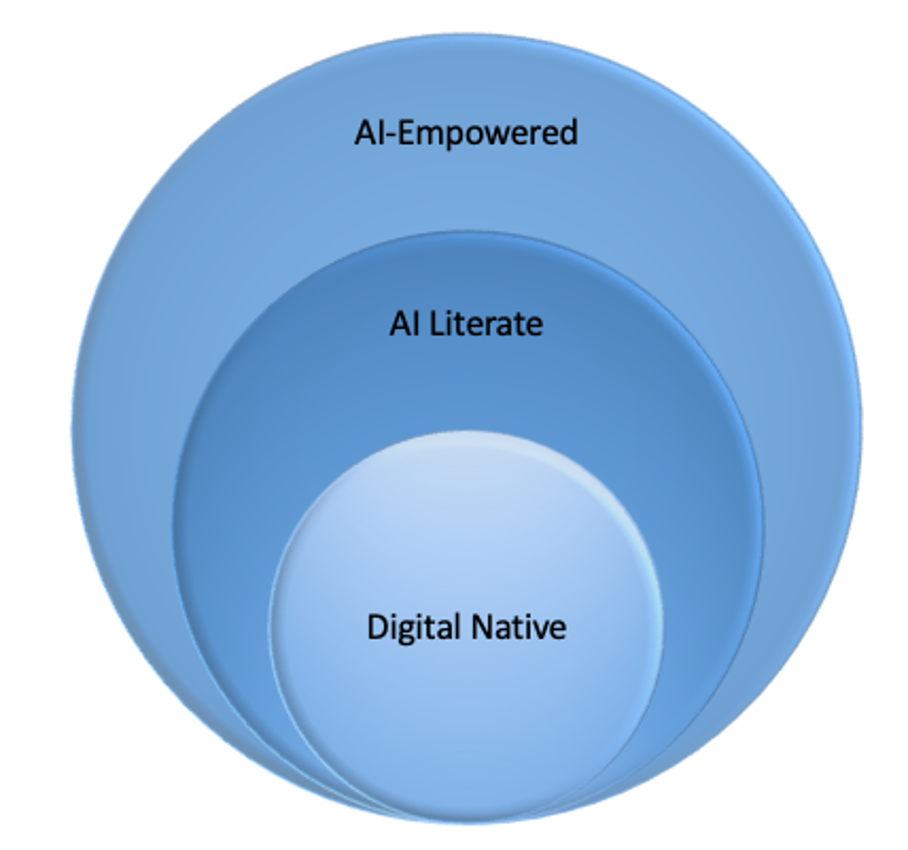

Digital native skills will continue to be necessary. In addition, learners will need to stack AI literacy skills on top of those to succeed in the workplace of tomorrow. These AI literacy skills must be mapped into their curriculum from K-12 through higher education, as AI literacy may eventually become as critical to learners as reading and writing. To further combat the digital divide, educators will need to increasingly advocate for initiatives that promote digital equity, ensuring both access and adaptability. Ultimately, true AI empowerment will come as learners bridge from AI literacy skill sets to the human-AI partnership model.

Keeping the Learner at the Center and Top of Mind

Advances in learning design and technology have the potential to reduce unnecessary friction in the learning experience, thereby improving learning outcomes. LxD tenets place learner empathy at the center of the design process, borrowing heavily from advances in cognitive science, online learning research, and product development. The adaptive learning environment, long discussed as a "North Star" for increasing equity and opportunity, is conceptualized to guide learners toward attaining new competencies by adjusting to learning preferences seamlessly. AI can be embedded in adaptive learning platforms, providing features like intelligent pedagogical assistants, just-in-time tutoring, and even study-group formation.

In an ideal scenario, after being immersed in a digital equity environment throughout their education, the upcoming generation of learners will enter higher education empowered by AI. Adaptive learning platforms will be fully developed, and LxD strategies will be prevalent in most institutions, streamlining the learning process. Educators will establish strong AI competencies, as well as teach skills that AI can't replicate for professionals in specific fields. Moreover, learners will be acutely aware of the importance of independent thought, understanding that they should never fully surrender their cognitive autonomy. Such learners would carry with them remarkable critical thinking capabilities, ethical judgment, and interdisciplinary insights, thereby propelling innovation. We aren't there yet, but this can and should be our goal for creating learner-centered environments in the age of AI.

This vision captures the promise of AI when synergized with other pivotal advancements. Yet, numerous steps remain to realize this potential:

- Higher education institutions should emphasize AI literacy and empowerment for their staff and faculty, ensuring they model best practices, protect sensitive institutional data, and collaborate effectively with students.

- Scholars must identify AI's contributions within their specific fields, to prepare learners for relevant jobs.

- Professors should become adept at considering AI when designing assessments.

- Higher education technology organizations will be under increased pressure to develop new AI-focused data protection and privacy approaches, particularly as efforts to compromise AI tools increase. "Walled garden" solutions that keep institutional data safe internally while integrating external data offer the best advantages.

- Leaders in higher education will navigate a complex policy environment, weighing the benefits against risks related to data and intellectual property rights.

As our understanding of AI evolves, the potential for synergistic human-AI collaboration will pave the way for next-generation innovators, influencing the future of work. Keep in mind that AI has long been expected to affect knowledge workers in sectors like law and marketing. However, it is now affecting professions once considered "safe." In my roles as an advocate, consultant, and professor of learning experience design and organizational leadership, I'm committed to promoting the cooperative spirit exemplified by Captain Picard and Data. Taking a cue from Captain Picard, this vision is imperative to prepare the next generation, or "Gen AI," to "make it so."

Note: OpenAI's GPT-4 provided editing support during this article's drafting process.