Survey: 86% of Students Already Use AI in Their Studies

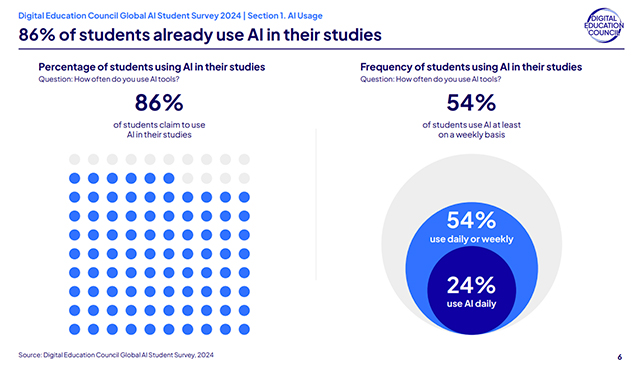

In a recent survey from the Digital Education Council, a global alliance of universities and industry representatives focused on education innovation, the majority of students (86%) said they use artificial intelligence in their studies. And they are using it regularly: Twenty-four percent reported using AI daily, and 54% reported using it on at least a weekly basis.

Courtesy of Digital Education Council

For its 2024 Global AI Student Survey, the Digital Education Council gathered 3,839 responses from bachelor's, master's, and doctoral students across 16 countries. The students represented multiple fields of study.

On average, surveyed students use 2.1 AI tools for their courses. ChatGPT remains the most common tool used, cited by 66% of respondents, followed by Grammarly and Microsoft Copilot (each 25%). The most common use cases:

- Search for information (69%);

- Check grammar (42%);

- Summarize documents (33%);

- Paraphrase a document (28%); and

- Create a first draft (24%).

Despite their wide use of AI tools, students were not confident about their AI literacy, the survey found. Fifty-eight percent of students reported feeling that they do not have sufficient AI knowledge and skills, and 48% felt inadequately prepared for an AI-enabled workforce. Notably, 80% of surveyed students said their university's integration of AI tools (whether that be integration into teaching and learning, student and faculty training, course topics, or other areas) does not fully meet their expectations.

Students' top AI expectations included:

- Universities should provide training for both faculty and students on the effective use of AI tools (cited by 73% and 72% of respondents, respectively);

- Universities should offer more courses on AI literacy (72%);

- Universities should involve students in the decision-making process regarding which AI tools are implemented (71%); and

- Universities should increase the use of AI in teaching and learning (59%).

"The rise in AI usage forces institutions to see AI as core infrastructure rather than a tool," commented Alessandro Di Lullo, CEO of the Digital Education Council and Academic Fellow in AI Governance at The University of Hong Kong. At the same time, he said, "universities need to consider how to effectively boost AI literacy to equip both students and academics with the skills to succeed in an AI-driven world."

The full report is available on the Digital Education Council site.

About the Author

Rhea Kelly is editor in chief for Campus Technology, THE Journal, and Spaces4Learning.