Report Makes Business Case for Responsible AI

A new report commissioned by Microsoft and published last month by research firm IDC notes that 91% of organizations use AI tech and expect more than a 24% improvement in customer experience, business resilience, sustainability, and operational efficiency due to AI in 2024.

"In addition, organizations that use responsible AI solutions reported benefits such as improved data privacy, enhanced customer experience, confident business decisions, and strengthened brand reputation and trust," said Microsoft exec Sarah Bird in a blog post. "These solutions are built with tools and methodologies to identify, assess, and mitigate potential risks throughout their development and deployment."

The report, titled "From Risk to Reward: The Business Case for Responsible AI," outlines the following foundational elements of a responsible organization:

- Core values and governance: It defines and articulates its responsible AI (RAI) mission and principles, supported by the C-suite, while establishing a clear governance structure across the organization that builds confidence and trust in AI technologies.

- Risk management and compliance: It strengthens compliance with stated principles and current laws and regulations while monitoring future ones. It also develops policies to mitigate risk and operationalizes those policies through a risk management framework with regular reporting and monitoring.

- Technologies: It uses tools and techniques to support principles such as fairness, explainability, robustness, accountability, and privacy and builds these into AI systems and platforms.

- Workforce: It empowers leadership to elevate RAI as a critical business imperative and provides all employees with training to give them a clear understanding of responsible AI principles and how to translate these into actions. Training the broader workforce is paramount for ensuring RAI adoption.

A few Microsoft-provided highlights of the report, meanwhile, include:

- More than 30% of respondents noted that the lack of governance and risk management solutions is the top barrier to adopting and scaling AI.

Top Barriers to AI Adoption (source: IDC).

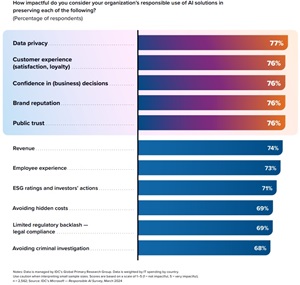

- More than 75% of respondents who use responsible AI solutions reported improvements in data privacy, customer experience, confident business decisions, brand reputation, and trust.

Level of Impact of Organization's Responsible Use of AI Solutions (source: IDC).

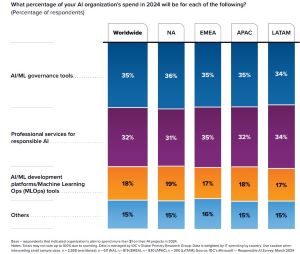

- Organizations are increasingly investing in AI and machine learning governance tools and professional services for responsible AI, with 35% of AI organization spend in 2024 allocated to AI and machine learning governance tools and 32% to professional services.

AI Organization's Budget Allocation, 2024 (source: IDC).

The report also provides advice to organizations seeking to implement responsible AI. They should establish clear principles guiding AI development, avoid reinforcing unfair biases, and prioritize safety by testing systems in controlled environments and monitoring them after deployment. They should form diverse AI governance committees to oversee responsible use, align internal and external policies with legal and ethical standards, and promote transparency and explainability. Regular AI audits, privacy protection, and diverse testing criteria are essential, alongside ongoing employee training in responsible AI practices. Organizations should adopt end-to-end governance, encompassing the infrastructure, model, application, and end-user layers, to address risks and compliance. Adapting to regulations like the EU AI Act, which imposes strict requirements on high-risk applications, is crucial. Enterprises should also strengthen governance over generative AI systems by controlling user interactions, adding safeguards, and fostering safe exploration, balancing risk mitigation with the benefits of AI tools.

Microsoft offered the following guidance:

- Establish AI principles: Commit to developing technology responsibly and establish specific application areas that will not be pursued. Avoid creating or reinforcing unfair bias and build and test for safety.

- Implement AI governance: Establish an AI governance committee with diverse and inclusive representation. Define policies for governing internal and external AI use, promote transparency and explainability, and conduct regular AI audits.

- Prioritize privacy and security: Reinforce privacy and data protection measures in AI operations to safeguard against unauthorized data access and ensure user trust.

- Invest in AI training: Allocate resources for regular training and workshops on responsible AI practices for the entire workforce, including executive leadership.

- Stay abreast of global AI regulations: Keep up-to-date with global AI regulations, such as the EU AI Act, and ensure compliance with emerging requirements.

"By shifting from a reactive AI compliance strategy to the proactive development of mature responsible AI capabilities, organizations will have the foundations in place to adapt as new regulations and guidance emerge," the report said in conclusion. "This way, businesses can focus more on performance and competitive advantage and deliver business value with social and moral responsibility."

The report used survey data from March 2024.

More information can be found in an accompanying webinar.

Read the full report here.

About the Author

David Ramel is an editor and writer at Converge 360.