
U Wisconsin-Madison Project Tackles Big Data Question in Astronomy

By Dian Schaffhauser, 04/21/15

An astronomy project at the University of Wisconsin-Madison has made inroads on two questions: how to track neutral hydrogen in the "distant" universe, and how to scale up the capacity to maintain and manage the data that such work generates.

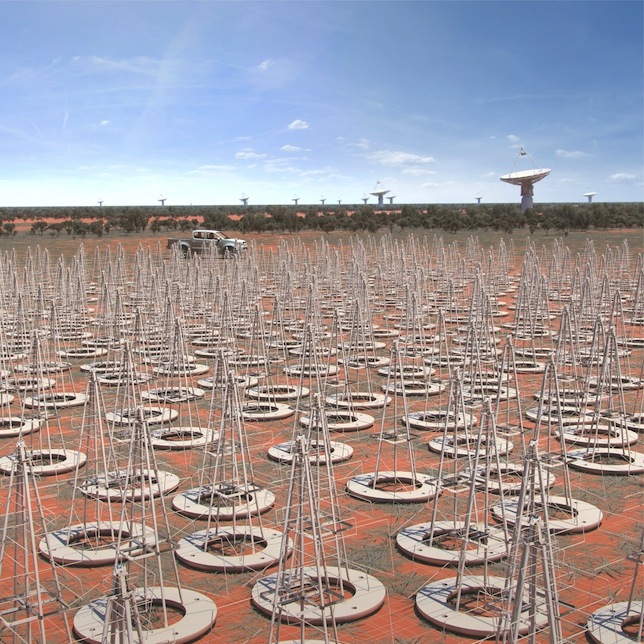

The importance of the latter is hard to overstate. The Square Kilometre Array (SKA), an international effort to build the world's largest radio telescope, will generate unfathomable amounts of data from its sites in Africa and Australia. The SKA's dishes, for instance, will produce 10 times the global Internet traffic, and the data collected in a single day would take nearly 2 million years to play back on an iPod.

The U Wisconsin scientists have developed a new algorithm, "Autonomous Gaussian Decomposition (AGD)," which has been shown to produce component counts comparable to those from human analysis, with the added advantage of keeping up with very large data volumes. According to the researchers, AGD uses derivative spectroscopy and machine learning to provide optimized guesses for the number of Gaussian components in the data, as well as their locations, widths and amplitudes.
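The article describes AGD only in one sentence. As a rough, hypothetical illustration of the derivative-spectroscopy idea (not the researchers' code), the Python sketch below guesses Gaussian components in a synthetic, noise-free spectrum. Each negative local minimum of the second derivative, where the signal is above a threshold, is taken as a component center; the signal value there is the amplitude guess; and because a Gaussian with amplitude A and width σ has a second derivative of -A/σ² at its peak, the width is estimated as √(-A/f''). The function names, the threshold and the test spectrum are all assumptions made for illustration. Real spectra are noisy, which makes raw numerical derivatives unreliable, and this sketch skips both that problem and the machine-learning step the article mentions.

```python
import math

# Hypothetical illustration of derivative spectroscopy; not the AGD implementation.


def gaussian(x, amp, mu, sigma):
    """One Gaussian component evaluated at x."""
    return amp * math.exp(-((x - mu) ** 2) / (2 * sigma ** 2))


def second_derivative(y, dx):
    """Central finite-difference estimate of y'' (endpoints left at 0)."""
    d2 = [0.0] * len(y)
    for i in range(1, len(y) - 1):
        d2[i] = (y[i - 1] - 2 * y[i] + y[i + 1]) / dx ** 2
    return d2


def guess_components(x, y, threshold):
    """Return (amplitude, center, width) guesses, one per detected component.

    A candidate is a local minimum of y'' that is negative and sits where the
    signal exceeds `threshold`. For a Gaussian, y''(mu) = -A / sigma**2, so the
    width is estimated as sqrt(-A / y''(mu)).
    """
    dx = x[1] - x[0]  # assumes evenly spaced channels
    d2 = second_derivative(y, dx)
    guesses = []
    # Skip the two outermost points so both neighbours hold real derivative values.
    for i in range(2, len(y) - 2):
        is_local_min = d2[i] < d2[i - 1] and d2[i] <= d2[i + 1]
        if is_local_min and d2[i] < 0 and y[i] > threshold:
            amp = y[i]
            guesses.append((amp, x[i], math.sqrt(-amp / d2[i])))
    return guesses


# Synthetic, noise-free "spectrum" made of two well-separated components.
x = [-50 + 0.5 * k for k in range(201)]
y = [gaussian(v, 1.0, -15.0, 4.0) + gaussian(v, 0.6, 12.0, 6.0) for v in x]

guesses = guess_components(x, y, threshold=0.05)
for amp, center, width in guesses:
    print(f"amplitude={amp:.3f} center={center:.1f} width={width:.2f}")
```

Run as-is, the sketch should report two components at the true centers (-15 and 12) with amplitudes near 1.0 and 0.6 and widths slightly above 4 and 6; the small width bias comes from the finite-difference step. In a full decomposition, guesses like these would typically seed a least-squares fit rather than serve as final answers.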

As astronomers prepare for the launch of the SKA, which is expected to be operational in the mid-2020s, "there are all these discussions about what we are going to do with the data," said Robert Lindner, who performed the research as a postdoctoral fellow in astronomy and now works as a data scientist in the private sector. "We don't have enough servers to store the data. We don't even have enough electricity to power the servers. And nobody has a clear idea how to process this tidal wave of data so we can make sense out of it."

The SKA's hydrogen data will arrive as pixels, each carrying information about the hydrogen within a single dot of sky. Extracting meaning from one pixel can take a human "20 to 30 minutes" of concentrated scrutiny, a pace that cannot keep up when the SKA data streams are producing millions of pixels.

Lindner and his former colleagues developed a computational approach that solves the hydrogen location problem using only a small amount of computer time. Their software can be trained to answer the "how many clouds behind the pixel?" question, and it ran on a high-capacity computer network at UW-Madison's campus computing center, the Center for High Throughput Computing.

For comparison, a graduate student provided "the hand-analysis." The results show that the new algorithm is accurate enough to replace manual processing as the data deluge begins pouring in.

The goal is to explore the formation of stars and galaxies, Lindner explained. "We're trying to understand the initial conditions of star formation: how, where, when do they start? How do you know a star is going to form here and not there?"

With automated data processing, "suddenly we are not time-limited," Lindner added. "Let's take the whole survey from SKA. Even if each pixel is not quite as precise, maybe, as a human calculation, we can do a thousand or a million times more pixels, and so that averages out in our favor."

About the Author

Dian Schaffhauser is a former senior contributing editor for 1105 Media's education publications THE Journal, Campus Technology and Spaces4Learning.