A Better Way to Find the Right OER

By tapping into a free Web service that turns learning objectives into search terms, a DeVry professor is helping instructors identify the best open educational resources for their courses.

By Dian Schaffhauser

09/02/15

For a lot of educators who want to use open educational resources (OER), the challenge is finding the "good stuff." Useful lessons, textbooks and whole courses may be freely available online, but pinpointing the ones that can really help students with a particular learning objective can become a major hurdle to their adoption.

Russ Walker, a professor in the College of Business & Management at DeVry University Long Beach, is an advocate for OER usage. He especially likes OER for supplemental materials. "Very often a textbook or other resources that are built into the course don't quite hit a learning objective right on the head," he noted. Students often come at the content with their own perspectives, interests and backgrounds, and they may need to learn a concept "in another way or from another viewpoint," he said, or they may just need a review on a topic they haven't worked with in a long time. So Walker does what he can to "provide a variety of resources, including OER."

Gradually, he realized that he was spending too much time "discovering and evaluating" OER. "We've really moved from the problem of scarcity to the problem of abundance," he said. "The major challenge in most cases is, how do you find the good stuff out there when it's scattered in many different repositories and it's not always indexed in the same way?"

Building an OER Assistant

A problem with current OER repositories, such as MERLOT II (with 62,204 possible results), OER Commons (with 44,327 post-secondary results) and OpenStax CNX (with 1,545 books), is that they lack "a good, consistent way of reviewing and rating," Walker said. "Most of the materials out there still are not reviewed [or] rated in a meaningful, helpful way."

As a former software developer, Walker decided to build a system that could "semi-automate" the selection of OER for himself and his colleagues. The project turned into his PhD dissertation and was tested by more than 50 faculty members within the DeVry University system. Although "OER Assistant" is now used only by Walker himself and "a few other colleagues on the Long Beach campus," the basics of the project are worth considering for any institution struggling to gain traction with OER usage.

Searching by Learning Objective

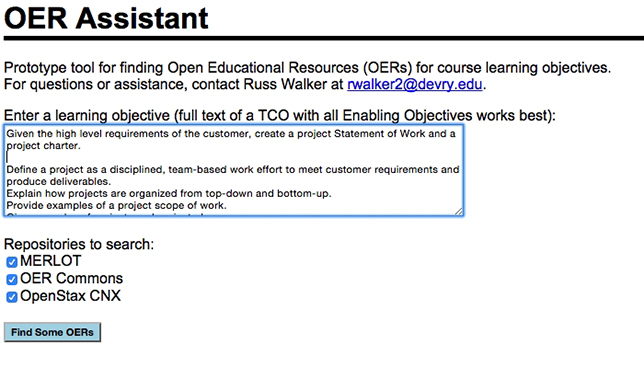

The OER Assistant works like this: An educator copies and pastes a learning objective from a particular course into a simple form. The text of the objective is automatically fed into a Web service that extracts key phrases and uses them as search terms in several OER repositories, and the results are displayed in a ranked order.

OER Assistant takes the text of a learning objective and lets the user specify which repositories to search.
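For readers who want to see the shape of that workflow, here is a minimal sketch in Python. It is not Walker's code: the stop-word splitter is a naive stand-in for a real key-phrase extraction service, the repositories are small in-memory lists of made-up sample records rather than live OER catalogs, and every function and variable name is hypothetical.

```python
import re
from typing import Dict, List, Tuple

# Assumed stop-word list; a real extraction service would rely on a trained model.
STOP_WORDS = {
    "a", "an", "and", "the", "of", "to", "in", "for", "on", "with",
    "explain", "describe", "calculate", "identify", "apply",
}

def extract_key_phrases(objective: str, max_phrases: int = 5) -> List[str]:
    """Naive stand-in for a key-phrase extractor: keep runs of content words."""
    tokens = re.findall(r"[a-z]+|[^a-z\s]", objective.lower())
    phrases: List[str] = []
    current: List[str] = []
    for token in tokens:
        if token.isalpha() and token not in STOP_WORDS:
            current.append(token)
            continue
        # A stop word or punctuation mark ends the current phrase.
        if current:
            phrases.append(" ".join(current))
        current = []
    if current:
        phrases.append(" ".join(current))
    unique = list(dict.fromkeys(phrases))  # drop repeats, keep first-seen order
    unique.sort(key=lambda p: len(p.split()), reverse=True)  # longer phrases first
    return unique[:max_phrases]

def search_repository(resources: List[dict], phrases: List[str]) -> List[dict]:
    """Return the resources whose title or description contains any key phrase."""
    hits = []
    for resource in resources:
        text = (resource["title"] + " " + resource["description"]).lower()
        matched = [p for p in phrases if p in text]
        if matched:
            hits.append({**resource, "matched": matched})
    return hits

def find_oer(objective: str, repositories: Dict[str, List[dict]],
             selected: List[str]) -> Tuple[List[str], List[dict]]:
    """Extract phrases, search the selected repositories, order by phrases matched."""
    phrases = extract_key_phrases(objective)
    results = []
    for name in selected:
        for hit in search_repository(repositories[name], phrases):
            results.append({**hit, "repository": name})
    results.sort(key=lambda r: len(r["matched"]), reverse=True)
    return phrases, results

# Made-up sample records, for illustration only.
SAMPLE_REPOSITORIES = {
    "Repository A": [
        {"title": "Net Present Value Basics",
         "description": "Covers the time value of money."},
        {"title": "Intro to Marketing",
         "description": "Segmentation and targeting."},
    ],
    "Repository B": [
        {"title": "Money and Banking",
         "description": "How banks create money."},
    ],
}

if __name__ == "__main__":
    objective = "Explain the time value of money and calculate net present value."
    phrases, results = find_oer(objective, SAMPLE_REPOSITORIES,
                                ["Repository A", "Repository B"])
    print(phrases)
    for r in results:
        print(r["repository"], "|", r["title"], "|", r["matched"])
```

In a working tool, `extract_key_phrases` would call out to an extraction service and `search_repository` would query each repository's own search interface; the surrounding flow would stay much the same.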

Walker experimented with a number of keyword phrase extractors to use in the OER Assistant, and settled on a Web-based extraction system from AlchemyAPI, an IBM company. Alchemy's extractor can be tested online, and it's also available through a free AlchemyAPI key, limited to a thousand transactions per day, that developers can add to their data apps.

"It's pretty amazing what modern machine learning technology can do as far as parsing a chunk of text into meaningful phrases," Walker observed. "We're not terribly good at coming up with search terms. But machines can do those things for us. If you then bring some possibilities right under our nose, it's a little easier for us to say, 'Oh, yeah, that looks good, and that looks good.'"

Filtering Results

The results offered by OER Assistant still need to be evaluated by the instructor to figure out which ones to recommend to students. But just that layer of filtering, Walker noted, is enough to "really smooth the process and make it much easier for people to take advantage of what's already out there."

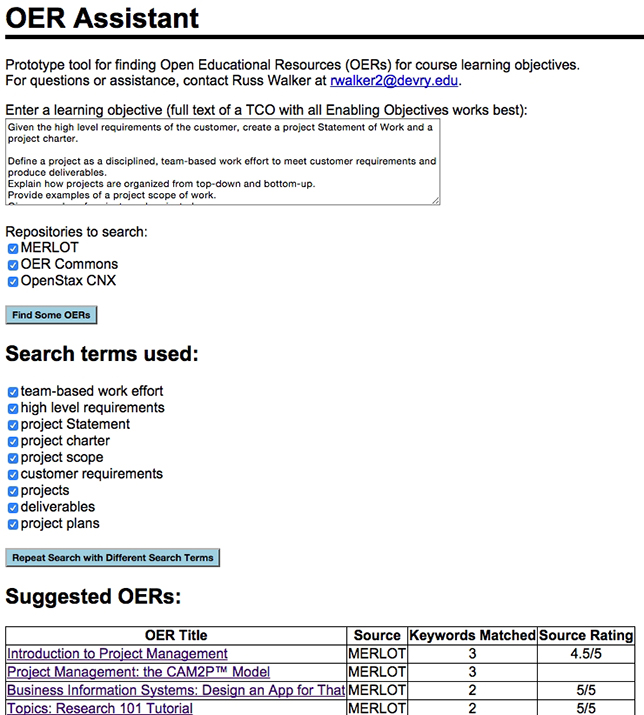

Search results are ranked according to how well they match the phrases extracted from the learning objective.

A user might end up with a hundred results. From there it can be a bit of a slog to "plow through," Walker admitted, but "if you find something you can use in the top 10, then fantastic, there's no real reason to look at the others."

One advantage is that Walker's algorithm for ranking the results isn't nearly as secretive as Google's. If a result carries a rating from its source OER repository, it gets ranked higher. And if it matches multiple key phrases extracted from the learning objective, that pushes it higher in the list too.
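Those two signals are simple enough to sketch in a few lines of Python. The article does not give Walker's actual formula, so the weighting here is an assumption: each matched key phrase counts for one point, and any result that arrives with a repository rating gets a flat bonus. The `Result` class, `RATING_BONUS` and both function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

# Assumed weight: how much having a repository rating lifts a result.
RATING_BONUS = 1.0

@dataclass
class Result:
    title: str
    matched_phrases: List[str]
    repository_rating: Optional[float] = None  # None when the repository has no rating

def score(result: Result) -> float:
    """One point per matched key phrase, plus a flat bonus if a rating exists."""
    points = float(len(result.matched_phrases))
    if result.repository_rating is not None:
        points += RATING_BONUS
    return points

def rank(results: List[Result]) -> List[Result]:
    """Highest score first; ties keep their original order."""
    return sorted(results, key=score, reverse=True)

if __name__ == "__main__":
    # Made-up sample results, for illustration only.
    sample = [
        Result("Unrated, one phrase", ["money"]),
        Result("Rated, one phrase", ["money"], repository_rating=4.5),
        Result("Unrated, three phrases",
               ["net present value", "time value", "money"]),
    ]
    for r in rank(sample):
        print(score(r), r.title)
```

Anyone recreating the idea could tune the bonus, or use the rating's value rather than its mere presence, to suit local preferences.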

The approach taken by Walker is something he believes others could replicate. "I've just pulled together some available resources and put them to a particular task," he explained. "I think this is something that many folks could easily recreate on their own. Maybe with their own particular tweak to how it displays the results or what repositories are searched and so on."

His advice: "Wire some things together and get started helping people find resources."