Google Translate Update Reduces Errors Up to 85 Percent

Source: Google Research Blog.

Students who have taken or are taking foreign language courses and have used Google Translate may be familiar with the tool's less-than-perfect translations. Yesterday, Google launched an updated system that uses state-of-the-art techniques to reduce translation errors by approximately 55 to 85 percent.

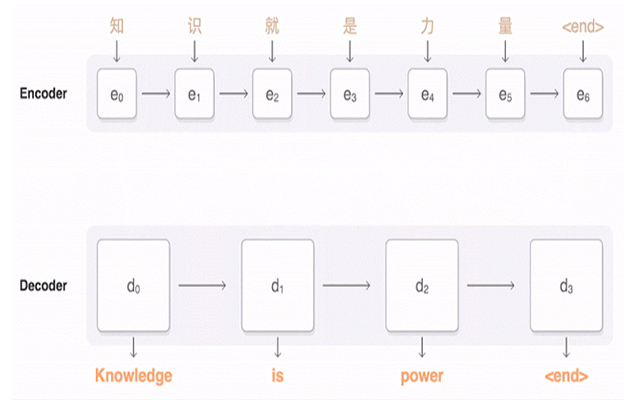

The newly launched Google Neural Machine Translation (GNMT) system delivers an "end-to-end approach for automated translation, with the potential to overcome many of the weaknesses of conventional phrase-based translation systems," according to the GNMT technical report. In brief, neural machine translation (NMT) systems work by treating the entire input sentence as a single unit for translation. Unlike other NMT systems, which sometimes struggle with rare words, GNMT provides faster and more accurate translations. The technology was deployed with the help of TensorFlow, an open source machine learning toolkit, and Tensor Processing Units (TPUs).

“Using human-rated side-by-side comparison as a metric, the GNMT system produces translations that are vastly improved compared to the previous phrase-based production system,” according to the Google Research Blog post announcing the update. “GNMT reduces translation errors by more than 55 percent-85 percent on several major language pairs measured on sampled sentences from Wikipedia and news websites with the help of bilingual human raters.”

For now, Google Translate will use the GNMT system only for Chinese-to-English translations, which account for roughly 18 million translations per day worldwide, but the company plans to roll out GNMT to "many more" languages in the coming months.

To learn more, the full technical report is available on the Google Research Blog site.

About the Author

Sri Ravipati is a Web producer for THE Journal and Campus Technology. She can be reached at [email protected].