How to Set Up a VR Pilot

As Washington & Lee University has found, there is no best approach for introducing virtual reality into your classrooms — just stages of faculty commitment.

- By Dian Schaffhauser

- 03/06/18

The headset goes on and the student is handed two controllers. She begins to manipulate a virtual model of a protein, turning it this way and that to study the structure. It's not exactly like playing Star Trek: Bridge Crew, but it's still way better than looking at a flat illustration in a textbook, which is exactly why Washington & Lee University's Integrative and Quantitative (IQ) Center is trying out the use of virtual reality in as many classes as it can. Even though it may feel like VR has been around for a long time (Oculus Rift launched its Kickstarter campaign in 2012), in education its use is still on the bleeding edge.

The work at the IQ Center offers a model for how other institutions might want to approach their own VR experimentation. The secret to success, suggested IQ Center Coordinator David Pfaff, "is to not be afraid to develop your own stuff" — in other words, diving right in. But first, there's dipping a toe.

The IQ Center is a collaborative workspace housed in the science building but providing services to "departments all over campus," said Pfaff. The facilities include three labs: one loaded with high-performance workstations, another decked out for 3D visualization and a third packed with physical/mechanical equipment, including 3D printers, a laser cutter and a motion-capture system.

Each of those labs also includes virtual reality gear (specifically a lone HTC Vive headset). As Pfaff put it, VR is "an isolating experience. Only one person can be in it at a time. It's kind of hard to figure how this can fit into a classroom."

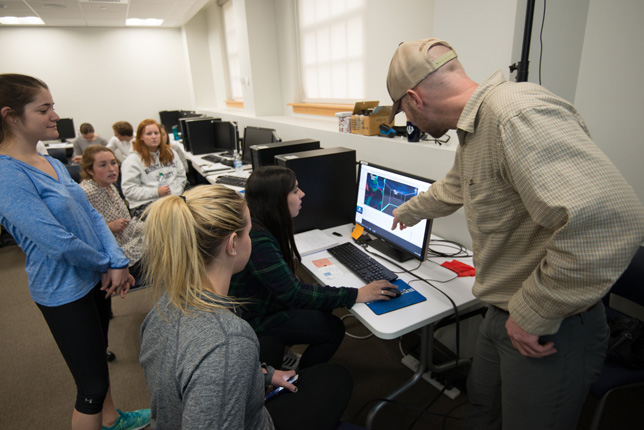

One of the IQ Center's three VR labs

Three Stages of Progress

Pfaff described three forms of VR work the center has accomplished so far.

First, there's the classroom demo, which works "on a small scale." Students come in and get a demonstration of the VR system to understand how the technology works. For example, he noted, a real estate class used the demo to learn how people sell properties using VR for buildings that haven't been built yet. The center has also worked with an ecology class and an English class (which was reading a book that involved VR).

The second use for VR is to have students build something themselves. "This can be a little complicated," said Pfaff. "But if you do enough setup ahead of time, you can make a workflow that students can handle in a short amount of time."

For instance, when the cellular biology class used VR to study the proteins that make up the internal structure of cells, students could have been shuffled into the 3D stereo lab to view the images, like watching the 3D version of a movie but without a plot. In place of that, the center worked with the faculty member to develop a workflow that would allow the students to download protein structures from the Protein Data Bank, a publicly available protein database, and then adapt them with Maxon Cinema 4D, a program that preps the files for use in VR. (Other programs that do a similar job, according to Pfaff, are 3DS Max and Maya from Autodesk and the open source Blender.) From there, the students put the files into a virtual world created in Unity, a game engine used for a lot of VR development work.
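The download step in that workflow can also be scripted. The sketch below is an assumption about how one might do it in Python, not the center's actual process: it builds the RCSB Protein Data Bank download URL for a four-character entry ID and counts the atoms in each chain of the returned text. The helper names are illustrative.

```python
from collections import Counter
from urllib.request import urlopen

# RCSB file-download endpoint; entry IDs are four characters, e.g. "1CRN".
PDB_URL = "https://files.rcsb.org/download/{pdb_id}.pdb"


def pdb_url(pdb_id: str) -> str:
    """Return the download URL for a PDB entry (hypothetical helper)."""
    pdb_id = pdb_id.strip().upper()
    if len(pdb_id) != 4 or not pdb_id.isalnum():
        raise ValueError(f"not a four-character PDB ID: {pdb_id!r}")
    return PDB_URL.format(pdb_id=pdb_id)


def fetch_pdb(pdb_id: str) -> str:
    """Download a PDB file as text (requires network access)."""
    with urlopen(pdb_url(pdb_id)) as response:
        return response.read().decode("utf-8")


def atoms_per_chain(pdb_text: str) -> Counter:
    """Count ATOM records per chain; the chain ID sits in column 22."""
    counts = Counter()
    for line in pdb_text.splitlines():
        if line.startswith("ATOM") and len(line) > 21:
            counts[line[21]] += 1
    return counts
```

Calling `atoms_per_chain(fetch_pdb("1CRN"))` would give a quick sanity check on a structure before it is handed to a 3D modeling tool for conversion.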

By the end of the four-hour lab, students could put on the Vive headset, enter the virtual world and pick up and manipulate their proteins using the hand controllers. "They were able to hold their protein in their hands and see a really good rendering of it," said Pfaff. But more importantly, he added, they also learned how to access protein data from the data bank and handle other activities they "needed to know anyway for all kinds of biology classes."

Another version of that kind of work was set up for a class on the molecular mechanics of life. The instructor gave his students an option for their final projects of creating complete VR environments with a learning component, in which cues respond to student input for biochemical reactions. "It's like a full-blown little educational module inside VR that anybody can go into and interact with," Pfaff explained. "So this could be used later on for other classes as well."

As part of those projects, the participants are learning how to program for VR. "It's not a lot of hardcore programming," Pfaff emphasized. "You can get around pretty well without writing too much code." To speed up the work, the center has adopted various tools, including the Virtual Reality Toolkit (VRTK), a collection of scripts and "concepts" that help people build VR applications quickly in Unity. "VRTK takes care of a lot of the heavy lifting," he said. "You can launch a Unity project, put in VRTK, and then in just a couple of clicks, you can have a world that you can move around [in] inside of VR. And then you drag in assets that you create outside, like the protein structures."

The third approach being tested out in the center is perhaps the most ambitious one: creating assignments that exist in VR.

Paul Low, a geologist and researcher for the university who has been the lead developer on many of the center's VR projects, has created a lesson to introduce new geology students to the crystal structures of minerals. According to Pfaff, Low developed a 10-step virtual experience for students. First, they get a short session on how to access the computer and pull up the program. Next, they learn how to use tools within the virtual world for grabbing objects and moving around in the space. Then they start the assignment, which is driven by voice commands. Students grab virtual objects and move them from one place to another, as the voice directs them; then they might drag a plane through a crystal to demonstrate their understanding of cleavage planes and mineral structures. "At each step it asks you a couple of questions to make sure you know what you're talking about, then it will take you on to the next step," he said.
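The step-by-step gating Pfaff describes, where the lesson moves on only after the student answers correctly, can be pictured as a small state machine. This is a hypothetical Python sketch, not Low's implementation (which runs in Unity); it simplifies "a couple of questions" to one question per step, and every class and field name is an assumption.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    prompt: str    # what the voice-over asks the student to do
    question: str  # check question asked after the task
    answer: str    # expected answer (placeholder content)


class Lesson:
    """Walks through steps in order, advancing only on a correct answer."""

    def __init__(self, steps: List[Step]):
        self.steps = steps
        self.index = 0

    @property
    def finished(self) -> bool:
        return self.index >= len(self.steps)

    def submit(self, response: str) -> bool:
        """Check the response for the current step; advance if it matches."""
        if self.finished:
            raise RuntimeError("lesson already complete")
        expected = self.steps[self.index].answer
        correct = response.strip().lower() == expected.lower()
        if correct:
            self.index += 1
        return correct
```

A wrong answer leaves the student on the same step, which matches the "make sure you know what you're talking about" behavior in the quote.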

Because the assignment takes about 30 minutes to work through, students make reservations to come in and do the lesson on their own at the center outside of class time.

Getting Started

Getting started with VR doesn't require a lot of equipment, Pfaff insisted — at least not compared to the 3D projection system in the center's main classroom space. That ran in the "six figures," he said. A Vive headset, on the other hand, is about $600.

On top of that, "you need a pretty decent computer," one that can run a graphics card capable of meeting the high demands of VR. In the case of the Vive, the graphics cards recommended by the manufacturer include the NVIDIA GeForce GTX 1060, AMD Radeon RX 480 or something equivalent. The computer's processor needs to be an Intel Core i5-4590, an AMD FX 8350 or better.

At Washington & Lee, the center runs HP workstations that cost about $2,000 apiece. Those are outfitted with NVIDIA's GTX 1070 cards. But Pfaff estimated that an ably outfitted computer could be acquired for $1,500, putting the total bill (headset plus computer) between $2,100 with the cheaper machine and $2,600 with a workstation like the center's.
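The totals quoted here are simple addition, and the same arithmetic scales to a multi-station pilot. A minimal sketch using only the prices from the article; the function names and the idea of multiplying by a station count are illustrative assumptions, not a budgeting method from the IQ Center.

```python
from typing import Tuple

HEADSET_USD = 600               # approximate Vive price quoted in the article
COMPUTER_USD = (1_500, 2_000)   # Pfaff's estimate, and the center's HP workstations


def station_cost_range(headset: int, computers: Tuple[int, int]) -> Tuple[int, int]:
    """Low and high cost of one headset-plus-computer station."""
    low, high = min(computers), max(computers)
    return headset + low, headset + high


def pilot_budget(stations: int) -> Tuple[int, int]:
    """Low and high hardware cost for a pilot with the given number of stations."""
    low, high = station_cost_range(HEADSET_USD, COMPUTER_USD)
    return stations * low, stations * high


print(station_cost_range(HEADSET_USD, COMPUTER_USD))  # (2100, 2600)
print(pilot_budget(3))                                # (6300, 7800)
```

Three stations, one per lab as at the IQ Center, would come to roughly $6,300 to $7,800 before software and furniture.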

A VR setup also requires space for moving around, Pfaff pointed out. The Vive is a room-scale VR system that comes with two "lighthouses," devices that are set up on opposite ends of the room (up to 16 feet apart) to track where the headset is in the space, and a pair of controllers that act as hands or some other kind of input devices. The Vive itself is tethered to the computer by a cable that's about 15 feet long, enabling the user to move around.

That's what distinguishes a high-end VR headset from the inexpensive versions, such as Google Cardboard, said Pfaff. With $15 equipment, "no matter where you move your head, the thing stays in front of you, so the whole world drags around with you." With the Vive, he noted, "you can throw something on the ground and then walk around it."
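Pfaff's contrast comes down to which parts of head motion are tracked. The following is a simplified Python sketch, not how either device's software is implemented: it models a rotation-only headset as one that ignores head translation and a room-scale headset as one that tracks position too. Head rotation is left out to keep the example short, and all names are illustrative.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def offset_from_viewer(obj: Vec3, tracked_head: Vec3) -> Vec3:
    """Where the object appears relative to the viewer's tracked position."""
    return tuple(o - h for o, h in zip(obj, tracked_head))


def rotation_only_view(obj: Vec3, real_head: Vec3) -> Vec3:
    """Rotation-only tracking: real head translation never reaches the app,
    so the virtual viewpoint stays fixed at the origin."""
    return offset_from_viewer(obj, (0.0, 0.0, 0.0))


def room_scale_view(obj: Vec3, real_head: Vec3) -> Vec3:
    """Room-scale tracking: the virtual viewpoint follows the real head."""
    return offset_from_viewer(obj, real_head)


box = (0.0, 0.0, 2.0)            # a box two meters in front of the start point
after_step = (0.0, 0.0, 1.0)     # the user walks one meter toward it

print(rotation_only_view(box, after_step))  # (0.0, 0.0, 2.0)
print(room_scale_view(box, after_step))     # (0.0, 0.0, 1.0)
```

In the rotation-only case the box is still two meters away after the step, which is the "whole world drags around with you" effect; with positional tracking the user actually gets closer and can walk around the object.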

Finally, there's the software. Because the center runs Unity, the staff can use photogrammetry, enabling the team to create digital models out of physical entities (a building, an archaeological specimen or anything else) and put them into the virtual space. Or, if there's an application that the instructor wants to try, it can be purchased and downloaded and run through SteamVR from Valve, the company better known for its computer games and online gaming platform. One example in use at the university is Calcflow, a VR graphing calculator.

Pfaff admitted that he can't keep up with the commercial market of VR software, so the center made a choice early on. "We're not going to wait for the application we need to appear. We decided to go down the road of developing our own content from the beginning."

Pfaff said he learned how to use the tools, such as VRTK, by watching videos on YouTube and checking out lessons through the university's Lynda account.

Pre-Research but Post-Dabbling

Washington & Lee faculty aren't at the stage where VR research is taking place. At this point, it's really more dabbling, because there aren't "big enough sample sizes," Pfaff said. "This is the one thing that we always try to figure out. Does [the use of VR] work better than just using a piece of paper? Does it teach you something? And that's a hard question to answer." That means the findings so far are purely anecdotal. "It certainly raises student engagement. They love interacting with stuff in VR. They love working on it. They get excited about it more than they do writing a paper," he asserted. "Whether that means they've learned more, I don't know."

There's still plenty of headspace for VR's usage in education to grow. Currently, for instance, the center hasn't figured out how to get the instructor fully into the virtual world alongside the student. Pfaff's team is using a program called PUN from Photon, which integrates with Unity to help developers create multiplayer games. Now the coders can create VR worlds that multiple people can inhabit, in which they see and talk with each other, which is a start.

What the center hasn't been able to master is the ability to allow one person to manipulate an object in the virtual world and have that activity show up in the other person's view too. "If I pick up a box, it doesn't pick up reliably in the other person's environment," Pfaff explained. "It's just a glitch. If we can work that out, that would be another huge avenue for users' instruction because you could do this anywhere. It could be two people in VR systems on opposite sides of the world."

And then there's the stretch goal: mixed reality. The center owns a motion-capture system, a set of cameras placed around the room that can track reflective markers. "If you put those reflective markers onto a motion-capture suit, you can capture your body and any sort of real-world objects," Pfaff said. "What would be interesting is to mix real objects with the virtual world." As he explained, a problem with VR is that a person in a headset can walk up to a virtual table and try to set something on it, but because the table doesn't really exist, the object falls to the ground. He'd like to give his users a way to reach out and grab something virtual, such as a coffee cup, and feel it as if it were really there. When the user picked it up, it would move. That would enable students "to reach out and grab something, such as a molecule, and touch it to get a tactile sense of it."

He insisted that the technology "is already there." It's just a matter of doing the work of finding or making the application and then hoping somebody comes along who can use it in a course. And that points to the final challenge Pfaff and the IQ Center have faced in their work related to VR piloting: getting instructors to buy into the technology. "We've been building all sorts of one-off demos, just hoping to show them off to faculty members and say, 'Hey, look at this! Would this work for your class?'"