VR, AR, and the Internet of Things: Life Beyond Second Life

A Q&A with Phil Repp


Ball State University has been exploring virtual reality since the early days of Second Life. Here, CT talks with Vice President for Information Technology Phil Repp about how our hyper reality has changed, with more advanced virtual reality, augmented reality, the ability to work in HD, the inclusion of the IoT and datasets, and the increasing accessibility of related tools and devices.

Mary Grush: When did Ball State University start working with virtual reality and related technologies, and why was that a priority for you?

Phil Repp: Our own efforts in VR began in the mid-90s and grew out of the need to have greater visualization of ideas in many of our disciplines on campus.

Grush: Hadn't there been strides in visualization in some disciplines much earlier than that?

Repp: The search for ways to visualize ideas has gone on for a very long time, particularly in the design disciplines. You can trace it back to the 15th century and Filippo Brunelleschi, who is credited with developing linear perspective: He didn't like the idea of flat drawings of his buildings, so he worked out how to show dimension through perspective. And there have been stages in various disciplines over time, in mathematics and the sciences, for example, where discipline-specific visualization tools took several steps forward.

So the search for better visualization of ideas has been on for centuries, but recently technology has taken it to a whole different level. And VR can both span disciplines and offer an intuitive experience.

For us at Ball State University, when technology tools started to get more sophisticated and VR became more generally available — you remember the early days of Second Life, for example — that's when we began experimenting with the hyper reality of representing and visualizing ideas.

Soon we were using many 3D tools, virtual reality, and augmented reality to represent ideas in a way that came closer to what a person sees in the mind's eye when sharing and communicating an idea.

Grush: Since then, you have had many virtual reality and augmented reality projects on campus. How do you coordinate all that experimentation?

Repp: As you can imagine, all that experimentation produced many efforts we'd like to recognize and keep investigating. The leader for this type of work on our campus is John Fillwalk, who is the director of our Institute of Digital Intermedia Arts within our Information Technology Division. He's an academic, a media specialist, a designer, and a creative leader who has taken our work with these tools to new levels.

Grush: How does John focus this work?

Repp: What John has done is to take the idea of visualization and turn it into a hyper reality. That hyper reality can take many forms, in many disciplines and contexts across campus.

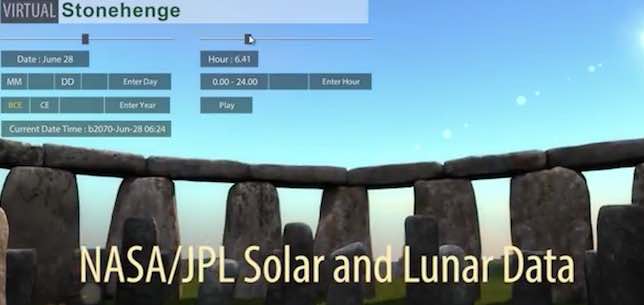

For example, he has been working with archeologists, trying to understand how celestial alignments would appear in ancient Rome, or considering how Stonehenge got its celestial alignments.

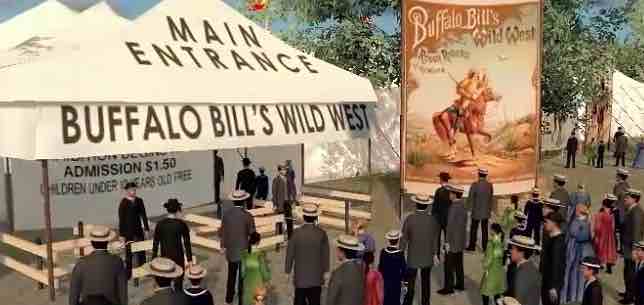

In another project he set out to recreate the experience of Buffalo Bill's Wild West show and what his encampment would have looked like. People can take themselves back to that time. It is an attempt to express and visualize that reality and take someone closer to what it might have really been like.

Grush: So you were using VR and AR tools to be able to create a hyper reality for any given academic context?

Repp: Yes, that was our drive to do this. But it gets even more interesting when virtual and augmented reality meet the Internet of Things. Then, you have an explosion of ideas that could be used especially for the classroom. We're probing all that now — but it came out of our history with that search for visualization and how to create a hyper reality.

Grush: Given that many of Ball State's initial experiments had been with Second Life, I have to ask: Is there life beyond Second Life? How do you create it?

Repp: Yes, there is life beyond Second Life. We have evolved and come a long way since our initial involvement with Second Life. We do a lot of our own coding, but we also use some proprietary tools, in particular tools that allow us to do high-definition work.

Grush: In those early days of Second Life, I can remember my avatar flying around your campus — and it seemed like your faculty and students were really tied into your experiments with VR. How did you capture their imagination — or did they capture yours?

Repp: I think that they captured our imagination in terms of what could be done jointly, and we all took part in some way.

For example, we have two planetariums on this campus. John took over the "dome" of one and projects his hyper reality experiments there, such as the Stonehenge project. When you walk in, you can look around a full 360 degrees and feel like you are touching Stonehenge.

And people who experience projects like Virtual Stonehenge imagine and suggest other things we can do.

This kind of advanced discovery, led by John, has raised awareness and piqued interest across the campus.

Grush: What are a few of your other experiments or projects that have received a lot of attention? Are any highly tied into the classroom?

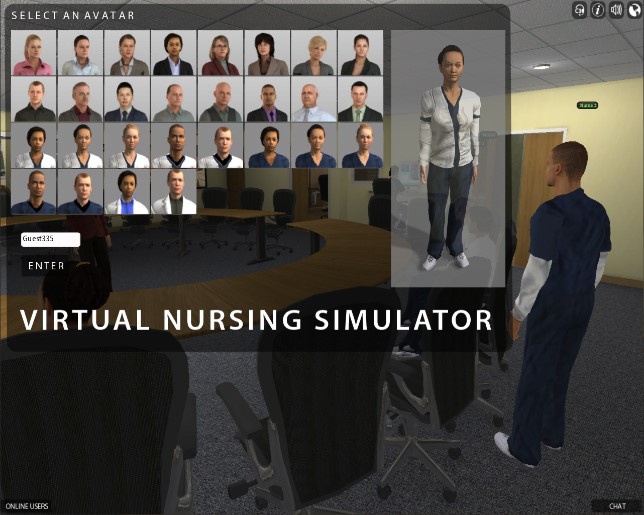

Repp: One of the things we've done that brings our experimentation closer to the classroom is a nursing simulator that we've put up as a VR environment on the Web. Students get their first experience taking measurements such as vital signs via the Web. We also have manikins on campus for hands-on training of this type, but using the Web environment for initial instruction is an excellent precursor to that experience. And given that Ball State has such a large number of online nursing students, that project really helped make connections for those students. This is a very successful initiative.

We've also been involved with the History Channel a couple of times, looking at Hadrian's Villa in Tivoli and exploring many parts of ancient Rome. John has worked with archeologists at Indiana University, Carnegie Mellon, the University of Virginia, and other institutions to look at how ancient cities were built. So you can explore those cities in VR, too, in some detail. We even downloaded data from NASA's Jet Propulsion Laboratory (JPL) that allowed us to create the proper alignment of celestial bodies for any given date in that timeframe.

We've done some projects recently with the Oculus Rift that created virtual flight experiences recreating the Wright brothers' flight at Kitty Hawk and Apollo 15. Yes, you too can fly the Wright brothers' plane.

Grush: Getting back to the idea of life beyond Second Life, we appreciate that Second Life was where a lot of universities got their toes wet with VR. But things have come a long way. Could you talk a bit about that and characterize how things have changed, given the constellation of VR/AR technologies and strategies, as we move ahead with new forms of hyper reality?

Repp: What I see is this hyper reality world, visualizing reality with VR/AR, coupled with a powerful influx of data layered on top of it as you add in the Internet of Things. This presents a really interesting opportunity for all of higher education to explore, creating a much greater and deeper learning experience outside the classroom.

I think when Second Life began, there was a lot of interest, but the toolset was limited — just because of the timeframe, not that the toolset wasn't a good one for that period. But, things matured. I think it was, in particular, the ability to work in HD that improved things a lot. Then came the ability to bring in datasets — creating dashboards and ways for people to access other data that they could bring into the virtual reality experiment. I think those two things were real forces for change.

A dashboard could pop up, and you could select among several tools, and you could get a feed from somewhere on the Internet — maybe a video or a presentation. And you can use these things as you move through this hyper reality: The datasets you select can be manipulated and be part of the entire experience.

So the hyper reality experience became deeper and richer with tools and data via the IoT, and with HD it became more realistic.

These things have caused a lot more experimentation, and a lot more relevance to the process of learning.

Grush: I know that Ball State has made a significant investment over the years and continues to support the experiments of faculty in these areas. Given what you just described about things like dashboards, incorporating datasets, and the IoT, will other institutions be able to approach new forms of hyper reality, particularly if they might not have the budget to commit?

Repp: Institutions are, of course, committed to investigating all this at different levels. But all of this is becoming more affordable, and therefore more commonplace, especially as we see this technology moving to mobile devices. Over time, the cost of getting in has come down.

And more and more institutions are seeing the value of moving into this space. If you keep track, you'll notice a little bit of growth every day. But many of these efforts are relatively small scale at most universities. A smaller proportion of institutions, like ours, are investing in these projects at a large scale.

I think sooner, rather than later, we'll see more campuses moving into this space and trying to differentiate the experience for the individual student as well as the changing classroom experience.

Grush: You are in a unique position at Ball State, because your institution is out in the lead in the areas we've been talking about. What do you think about when you look ahead at how higher education might be impacted by these technologies?

Repp: We can't deny the fact that curriculum and the way we teach are becoming unbundled. Some things are going to happen online and in the virtual space, and other things will happen in the classroom. And the expense of education is going to drive how we operate. Virtual reality tools, augmented reality tools, and visualization tools can offer experiences that can be mass-produced and sent out to lots of students, machine to machine, at a lower cost. Virtual field trips and other kinds of virtual learning experiences will become much more commonplace in the next 5 years.

This increasing accessibility of VR and AR, along with the affordability of tools and devices, and the growth of the IoT, will allow more people to experiment and discover new ways to help people learn and understand how the world works. With all the data we now have, and all the devices that can talk machine-to-machine, plus the ability to personalize the learning experience, our approach to teaching and learning will be affected, or even truly disrupted. How will we manage all this? That's the question I keep in mind as I look down the road. I think we have some important opportunities ahead.

[Editor's note: All images courtesy Ball State University and the IDIA Lab. For more information on IDIA Lab projects, visit idialab.org/projects/.]
