Report: AI/ML Tool Usage Skyrockets Across Industry Sectors

A new report from cloud security specialist Zscaler finds that use of enterprise AI/ML tools across industry sectors has skyrocketed, led, of course, by ChatGPT, which also happens to be the most-blocked AI application.

The "Zscaler ThreatLabz 2024 AI Security Report" is based on more than 18 billion transactions processed from April 2023 to January 2024 by the Zscaler Zero Trust Exchange, the company's cloud-based security service.

The report examines how enterprises are using AI and ML tools today and identifies key trends in how they are adapting to the ever-changing AI landscape and securing those tools.

An accompanying blog post listed key ThreatLabz AI findings:

- Explosive AI growth: Enterprise AI/ML transactions surged by 595% between April 2023 and January 2024.

- Concurrent rise in blocked AI traffic: Even as enterprise AI usage accelerates, enterprises block 18.5% of all AI transactions, a 577% increase in blocked transactions that signals rising security concerns.

- Primary industries driving AI traffic: Manufacturing accounts for 21% of all AI transactions in the Zscaler security cloud, followed by Finance and Insurance (20%) and Services (17%). Education represents just 1.7% of AI transactions.

- Global AI adoption: The top five countries generating the most enterprise AI transactions are the US, India, the UK, Australia, and Japan.

- A new AI threat landscape: AI is empowering threat actors in unprecedented ways, including for AI-driven phishing campaigns, deepfakes and social engineering attacks, polymorphic ransomware, enterprise attack surface discovery, exploit generation, and more.

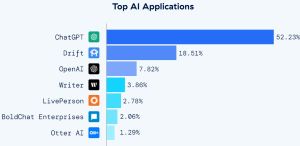

Another highlight is the list of the AI applications most popular with enterprises, based on the collected transaction data:

- ChatGPT: You should already know all about this famous chatbot that started the whole gen AI craze, officially described as "a free-to-use AI system. Use it for engaging conversations, gain insights, automate tasks, and witness the future of AI, all in one place."

- Drift: Conversational AI.

- OpenAI: The company that created ChatGPT. It appears separately because the Zscaler Zero Trust Exchange tracks ChatGPT transactions independently of OpenAI transactions at large, which may include API calls.

- Writer: A full-stack generative AI platform.

- LivePerson: A conversational AI platform for business.

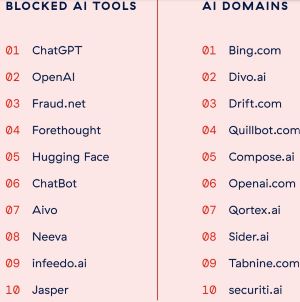

Perhaps surprisingly, ChatGPT and OpenAI rank among the three most-blocked tools, along with fraud-detection platform Fraud.net.

Top AI Applications (source: Zscaler).

As might be expected, blocking of AI transactions has increased along with AI usage. Countering that 595% uptick in enterprise AI/ML transactions is a 577% increase in blocked transactions, which now amount to 18.5% of all AI transactions.

Top Blocked AI Transactions (source: Zscaler).

"This indicates that despite — or even because of — the popularity of these tools, enterprises are working actively to secure their use against data loss and privacy concerns," Zscaler said.

Another chart adds the top blocked AI domains.

Top Blocked AI Tools and Domains (source: Zscaler).

Zscaler shared four best practices for integrating AI tools into the enterprise:

- Continually assess and mitigate the risks that come with AI-powered tools to protect intellectual property, personal data, and customer information.

- Ensure that the use of AI tools complies with relevant laws and ethical standards, including data protection regulations and privacy laws.

- Establish clear accountability for AI tool development and deployment, including defined roles and responsibilities for overseeing AI projects.

- Maintain transparency when using AI tools — justify their use and communicate their purpose clearly to stakeholders.

The company offered the following takeaway for educational institutions when it comes to AI risk:

"In education, data privacy concerns will likely grow as the sector continues to embrace AI tools, specifically surrounding protections afforded to students' personal data. In all likelihood, the education sector will increasingly adopt technological means to block selective AI applications, while providing greater data protection measures for personal data."

The full report can be downloaded from the Zscaler site (registration required).