Linux Foundation Launches New Open Source AI & Data Platform to Democratize Gen AI Tech

In an effort to "democratize" AI tech, the Linux Foundation has launched a new open source AI/data platform in partnership with Intel, Anyscale, Cloudera, DataStax, Domino Data Lab, Hugging Face and other industry players.

The Open Platform for Enterprise AI (OPEA) is a "Sandbox Project" from the organization's LF AI & Data arm, an umbrella foundation that supports open source innovation in AI and data. The goal is to enable the development of flexible, scalable generative AI (Gen AI) systems that harness the best open source innovations from across the ecosystem.

The OPEA aims to address fragmentation in the swiftly evolving Gen AI space by collaborating with the industry to standardize components, including frameworks, architecture blueprints and reference solutions that showcase performance, interoperability, trustworthiness and enterprise-grade readiness.

Specifically, the OPEA platform includes:

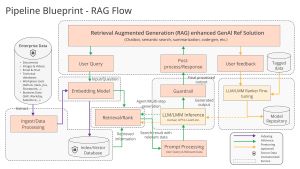

- A detailed framework of composable building blocks for state-of-the-art generative AI systems, including LLMs, data stores and prompt engines

- Architectural blueprints of the retrieval-augmented generative AI component stack and end-to-end workflows

Addressing RAG (source: LF AI & Data Foundation).

- A four-step assessment for grading generative AI systems on performance, features, trustworthiness and enterprise-grade readiness

AI democratization was mentioned by multiple partners, including:

- Red Hat: "As gen AI continues to advance, open source is playing a critical role in the standardization and democratization of models, frameworks, platforms and the tools needed to help enterprises realize value from AI."

- Hugging Face: "Hugging Face's mission is to democratize good machine learning and maximize its positive impact across industries and society. By joining OPEA's open source consortium to accelerate Generative AI value to enterprise, we will be able to continue advancing open models and simplify Gen AI adoption."

Democratization of AI has been a recurring theme as heavy industry investment has steered many AI efforts away from research and development and toward commercial, money-making applications (see "Cloud Giants Sign On to 'Democratizing the Future of AI R&D'").

"OPEA is emerging at a crucial juncture when Gen AI projects, particularly those utilizing RAG [Retrieval-Augmented Generation], are becoming increasingly popular for their capacity to unlock significant value from existing data repositories," said the LF AI & Data Foundation in an April 16 blog post.

"The swift advancement in Gen AI technology, however, has led to a fragmentation of tools, techniques, and solutions. OPEA intends to address this issue by collaborating with the industry to standardize components, including frameworks, architecture blueprints and reference solutions that showcase performance, interoperability, trustworthiness and enterprise-grade readiness," the foundation said.

About the Author

David Ramel is an editor and writer at Converge 360.