What Does Privacy 'Look' Like? Carnegie Mellon Project Seeks Drawings

- By Dian Schaffhauser

- 01/22/15

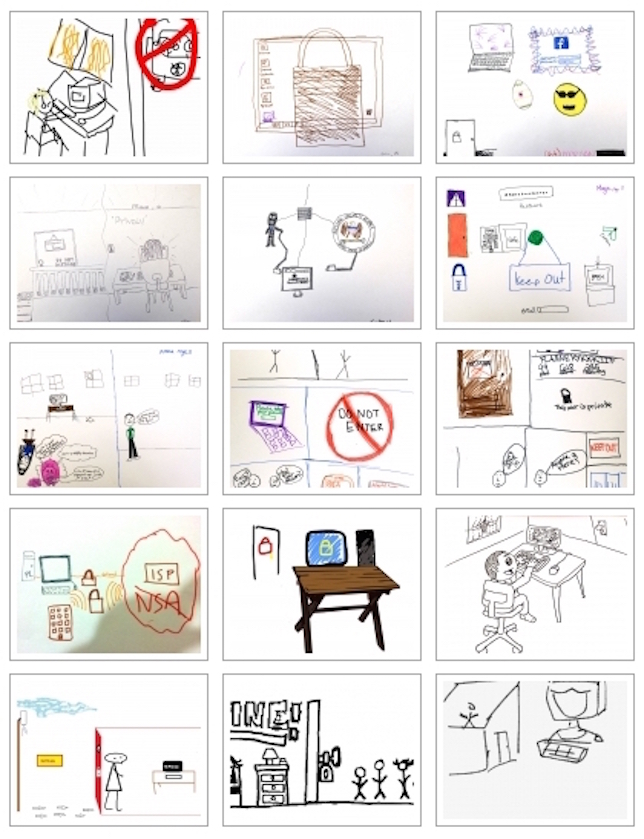

To a kindergartener, privacy looks like what Spider-Man needs to put his costume on. To someone in her 60s, it's a picture of a person walking the dog in the countryside "away from everyone" to have time for "thinking and reflecting." These are the kinds of drawings Carnegie Mellon is receiving in response to its invitation for people to draw what privacy means to them. So far, the Pittsburgh university has amassed "hundreds of drawings" from participants aged five to 91.

The project, Privacy Illustrated, is led by Lorrie Cranor, a professor in the Department of Computer Science and director of the CyLab Usable Privacy and Security Lab.

Cranor's project was sparked at the university's Studio for Creative Inquiry. There, a group of artists, doctoral students and others worked together on Deep Lab, a "congress of cyberfeminist researchers" who examined privacy, security, surveillance, anonymity and large-scale data aggregation. In five days the group produced a 240-page book, available as a free download or as a $57 print-on-demand volume. "Privacy Illustrated" was one of the book's chapters, offering commentary, sample drawings and excerpts from the privacy policies of popular sites.

Cranor and others visited the Carnegie Mellon Children's School and Pittsburgh Public Schools classrooms to collect drawings from 75 students in four grades. For the adult drawings, her team initially crowdsourced the work, hiring people through Amazon Mechanical Turk to produce pictures at $1 apiece.

"It's a fascinating view into what people think about privacy," said Cranor. "With the little kids, you can see doors, bedrooms and pulling the blankets over their heads." Teens and adults show more concern about government surveillance and overexposure on social networks.

The subject of privacy will be explored further during the institution's upcoming "Privacy Day" on Jan. 28. Events will include a "privacy clinic" to teach people how to protect their privacy and a talk by Julie Brill, a commissioner on the Federal Trade Commission, on privacy, security and fairness in the Internet of Things.

The invitation to submit privacy drawings is still open; people can upload their pictures to the project site.

About the Author

Dian Schaffhauser is a former senior contributing editor for 1105 Media's education publications THE Journal, Campus Technology and Spaces4Learning.