Global IT leaders are placing bigger bets on generative artificial intelligence than on cybersecurity in 2025, according to new research by Amazon Web Services (AWS).

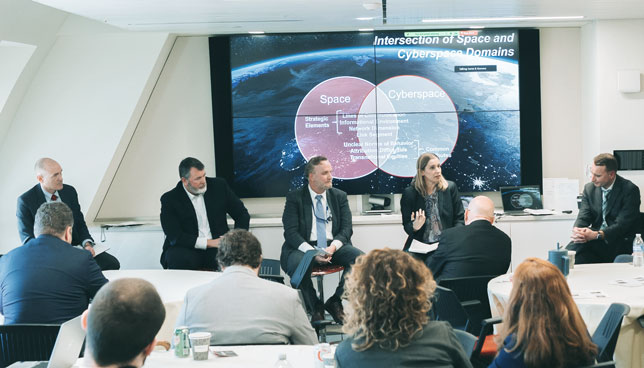

CT asked Scott Shackelford, Indiana University professor of law and director of the Ostrom Workshop Program on Cybersecurity and Internet Governance, about the possible emergence of space cybersecurity as a separate field that would support changing practices and foster future space cybersecurity leaders.

A new threat landscape report points to emerging cloud vulnerabilities. According to the 2025 Global Threat Landscape Report from Fortinet, while misconfigured cloud storage buckets were once a prime vector for cybersecurity exploits, other cloud missteps are gaining focus.

As organizations race to integrate AI agents into their cloud operations and business workflows, they face a crucial reality: while enthusiasm is high, major adoption barriers remain, according to a new Cloudera report. Chief among them is the challenge of safeguarding sensitive data.

Microsoft has announced major security advancements across its product portfolio and practices. The work is part of its Secure Future Initiative (SFI), a multiyear cybersecurity transformation the company calls the largest engineering project in company history.

A new report from Ontinue's Cyber Defense Center has identified a complex, multi-stage cyber attack that leveraged social engineering, remote access tools, and signed binaries to infiltrate and persist within a target network.

Microsoft has announced a major expansion of its AI-powered cybersecurity platform, introducing a suite of autonomous agents to help organizations counter rising threats and manage the growing complexity of cloud and AI security.

Google has announced it will acquire cloud security startup Wiz. If completed, the all-cash deal, valued at $32 billion, would be the largest acquisition in Google's history.

Apple mobile device management company Jamf has announced its intent to acquire Identity Automation, a provider of identity and access management (IAM) solutions for K-12 and higher education.

AI-powered data security company Cohesity has expanded its collaboration with Red Hat to enhance data protection and cyber resilience for Red Hat OpenShift Virtualization workloads.