Carnegie Mellon Researchers Learn 3D Image Tricks

- By Dian Schaffhauser

- 09/02/14

Researchers at Carnegie Mellon University have created a new photo editing system that allows users to take objects in 2D images and turn them into 3D models that can be turned over and flipped around, exposing sides that are not part of the original image. The secret is to match the object to be manipulated with a comparable 3D model publicly available online and to do a bit of "semi-automated" matching of the photo's pixels to generate the right geometrical shape, along with automated matching for colors, texture and lighting.

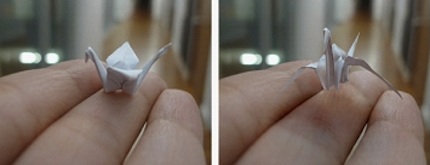

As shown in a demonstration video, a yellow taxi stuck in traffic suddenly sprouts the ability to rise up in the air, flip upside down (exposing its underside) and fly out of the scene. In another photo, a tiny paper crane sitting on a hand revolves in place and then flies away.

"In the real world, we're used to handling objects — lifting them, turning them around or knocking them over," said Natasha Kholgade, a Ph.D. student in the Robotics Institute and lead author of the study. "We've created an environment that gives you that same freedom when editing a photo."

A 3D photo editing system developed at Carnegie Mellon makes it possible to take a photo of an origami crane, left, and turn it to reveal surfaces hidden from the camera, while maintaining a realistic appearance, as seen at right. The object's shape can be changed, here enabling an animation showing the crane flapping its wings. Source: Carnegie Mellon University

A couple of challenges to the work are that 3D models don't always exist online for every object in a photo, and even when one does, it isn't always easy to find. So it makes sense that this research was sponsored, at least in part, by a Google Research Award.

Kholgade presented "3D Object Manipulation in a Single Photograph Using Stock 3D Models" at the SIGGRAPH 2014 Conference on Computer Graphics and Interactive Techniques in Vancouver, Canada, in August.

About the Author

Dian Schaffhauser is a former senior contributing editor for 1105 Media's education publications THE Journal, Campus Technology and Spaces4Learning.