Distance Learning Programs Make Case for Quality Assessment

- By Dian Schaffhauser

- 10/25/17

The Dallas County Community College District had grown its online learning programs organically for two decades when Terry Di Paolo, executive dean of online instructional services, decided it was time to take a "holistic view" of the programs to assess quality and create an improvement plan that aligned with its accreditation work. Attendance in 2015 at a Southern Association of Colleges and Schools Commission on Colleges (SACS) workshop introduced him to the Online Learning Consortium's Quality Scorecard. Other institutions at that event assured him that he could use the scorecard system across all seven colleges and various service centers that made up the district.

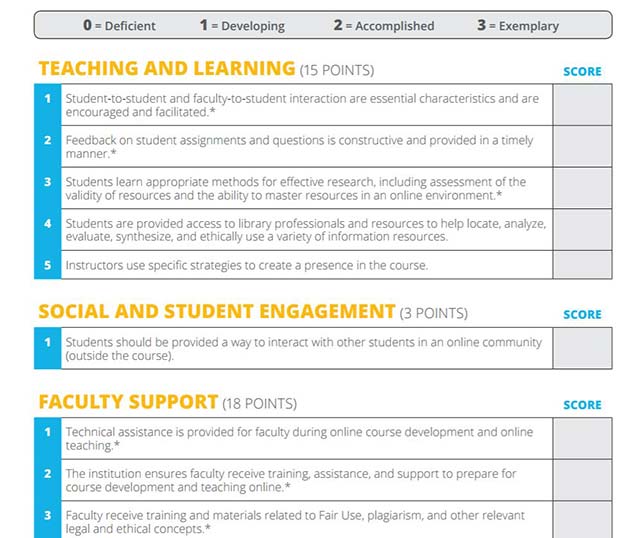

OLC's Quality Scorecards are worksheets that give schools criteria and benchmarking tools to assess the effectiveness of their online instructional efforts. Broad assessment areas cover administration of online programs; blended learning; quality course teaching and instructional practices; digital courseware instructional practice; and course design, the last a rubric developed and shared by the State University of New York. Each document covers multiple details; for example, the "administration of online programs" rubric has 75 criteria, each of which is scored on one of four levels: deficient (zero points), developing (one point), accomplished (two points) and exemplary (three points).
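To make the arithmetic concrete, here is a minimal sketch of how a 75-criterion rubric with those four levels could be tallied. It assumes the points are simply summed, which the description above does not state outright; the function name and the sample ratings are hypothetical and are not drawn from any institution's results.

```python
# Point values for the four levels named in the rubric description.
LEVEL_POINTS = {
    "deficient": 0,
    "developing": 1,
    "accomplished": 2,
    "exemplary": 3,
}


def score_rubric(ratings):
    """Return (total points, maximum possible points) for a list of level names.

    Assumption: the overall score is a plain sum of per-criterion points.
    """
    total = sum(LEVEL_POINTS[level] for level in ratings)
    maximum = len(ratings) * max(LEVEL_POINTS.values())
    return total, maximum


# Hypothetical ratings for a 75-criterion rubric (made-up data, illustration only).
ratings = (
    ["exemplary"] * 10
    + ["accomplished"] * 30
    + ["developing"] * 25
    + ["deficient"] * 10
)

total, maximum = score_rubric(ratings)
print(f"{total} out of {maximum} points")  # prints "115 out of 225 points"
```

Under that summing assumption, a rubric with 75 criteria tops out at 225 points (75 criteria times three points each).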

In the case of the Texas college system, over a 14-month period starting in February 2016, the district adopted the OLC Quality Scorecard internally, customizing it to the unique needs of its large and diverse institution, applying it to the district overall and to its individual colleges.

During that project the district realized that while individual colleges could evaluate their own efforts against the scorecard criteria, there was no visibility into the district's overall approach for the items being measured. Colleges were coming up with their own standards for the various aspects of online instruction and learning. Di Paolo and his small team of reviewers received all the college scores and converted them into a single score for each item. He also asked each college to provide five ideas for "quick fixes" and five for "long-term improvements" in each category.

In a new case study published on the OLC website, Di Paolo explained that "everything we created we gave back to the college teams with instructions to share." As the district moved through the process, he added, "you really saw a commitment to what we were doing. You could tell this was having an impact — I started to get requests to provide overviews to others in the district who were not directly involved with the scorecard."

By April 2017 the college system had gathered its top administrators in a room to report the results. During this session, participants engaged in conversations with the college teams about where the district's online programs were and where they should go next. As an institution, the case study noted, they realized "they needed to make some changes." Among them was a need to improve how the colleges mapped distance education policies to SACS requirements.

For the first time, this case study, along with those for two other institutions, is being published on the OLC website over the next couple of weeks. The idea, according to OLC, is to help schools find out what others have learned about evaluating the quality of their online learning programs. For example, a profile of Baker College's Baker Online will explain how the school used the quality scorecard to help prioritize improvements. The case study for Middle Tennessee State University lays out how the institution used its evaluation to pinpoint areas that needed improvement in its online offerings and to demonstrate deficiencies in order to gain support for the changes.

All three case studies will be available on the OLC Quality Scorecard Suite homepage.

About the Author

Dian Schaffhauser is a former senior contributing editor for 1105 Media's education publications THE Journal, Campus Technology and Spaces4Learning.