Report: Agentic AI Protocol Is Vulnerable to Cyber Attacks

A new report has identified significant security vulnerabilities in the Model Context Protocol (MCP), a protocol Anthropic introduced in November 2024 to facilitate communication between AI agents and external tools.

MCP has gained industry traction as a way to standardize how AI agents interact and share context, which is crucial for building more sophisticated and collaborative AI systems within enterprises. With that traction, however, has come attention from threat actors. The new report, from Backslash Security, highlights two major classes of flaws, "NeighborJack" and OS injection, that compromise the integrity of MCP servers and could allow unauthorized access to, and control over, host systems.

"MCP NeighborJack" was the most common weakness Backslash discovered, with hundreds of cases among the more than 7,000 publicly accessible MCP servers it analyzed. The core problem is that these servers were explicitly bound to all network interfaces (0.0.0.0), making them "accessible to anyone on the same local network." This misconfiguration exposes the MCP server to any attacker on that network, creating a significant entry point for exploitation.
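To make the NeighborJack misconfiguration concrete, here is a minimal Python sketch using only the standard library. It is not code from any MCP SDK or from Backslash, and the helper names `is_exposed_bind_host` and `open_listener` are hypothetical. It treats the unspecified address (0.0.0.0, or :: for IPv6) and any non-loopback address as network-reachable, and refuses to listen on them, so a server meant for local use stays reachable only from the same machine.

```python
import ipaddress
import socket


def is_exposed_bind_host(host: str) -> bool:
    """Return True if listening on `host` would accept connections from other machines.

    Expects an IP literal (e.g. "127.0.0.1", "0.0.0.0", "::1"); hostnames such as
    "localhost" are not resolved here and raise ValueError.
    """
    addr = ipaddress.ip_address(host)
    # 0.0.0.0 and :: are "unspecified" addresses: the OS listens on every interface.
    if addr.is_unspecified:
        return True
    # Any non-loopback address (e.g. a LAN IP) is reachable by neighbors on that network.
    return not addr.is_loopback


def open_listener(host: str = "127.0.0.1", port: int = 0) -> socket.socket:
    """Open an IPv4 TCP listener, refusing hosts that would expose it to the network.

    port=0 asks the OS for any free port; a real server would use a fixed one.
    """
    if is_exposed_bind_host(host):
        raise ValueError(f"refusing to bind to network-reachable address {host!r}")
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.bind((host, port))
    sock.listen()
    return sock
```

Calling `open_listener()` with no arguments listens on loopback only, while `open_listener("0.0.0.0")` raises `ValueError` instead of silently exposing the server to the local network.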

The second major category of vulnerability identified was "Excessive Permissions & OS Injection." Dozens of MCP servers were found to permit "arbitrary command execution on the host machine." This critical flaw can arise from various coding practices, such as "careless use of a subprocess, a lack of input sanitization, or security bugs like path traversal."
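The subprocess and path traversal failure modes named above can be illustrated with a short Python sketch. It is a hypothetical example, not code from any server Backslash audited: `safe_grep`, `resolve_inside_root`, and the `/srv/mcp-data` root are illustrative names, and it assumes a POSIX system with `grep` on the PATH and Python 3.9 or later. It passes arguments as a list instead of building a shell string, and it rejects file paths that resolve outside an allowed directory.

```python
import subprocess
from pathlib import Path

# Hypothetical directory the tool is allowed to read from.
ALLOWED_ROOT = Path("/srv/mcp-data")

# UNSAFE pattern, for contrast (do not use):
#   subprocess.run(f"grep {pattern} {filename}", shell=True)
# With shell=True, a pattern such as "x; rm -rf ~" would run a second command.


def resolve_inside_root(filename: str, root: Path = ALLOWED_ROOT) -> Path:
    """Resolve `filename` under `root`, rejecting traversal such as "../../etc/passwd"."""
    root = root.resolve()
    # resolve() collapses ".." and follows symlinks; an absolute `filename` replaces `root`.
    candidate = (root / filename).resolve()
    if not candidate.is_relative_to(root):  # Python 3.9+
        raise ValueError(f"path escapes allowed root: {filename!r}")
    return candidate


def safe_grep(pattern: str, filename: str, root: Path = ALLOWED_ROOT) -> str:
    """Search one file for `pattern` without ever invoking a shell."""
    path = resolve_inside_root(filename, root)
    # List form: each element reaches grep as a single argument, so shell
    # metacharacters in `pattern` stay literal. "--" stops option parsing, so a
    # pattern beginning with "-" cannot be read as a grep flag.
    result = subprocess.run(
        ["grep", "--", pattern, str(path)],
        capture_output=True,
        text=True,
        check=False,  # grep exits 1 when nothing matches; treat that as "no results"
        timeout=10,
    )
    return result.stdout
```

With this design, an input like `"x; echo pwned"` is simply a search pattern that matches nothing, and a filename like `"../../etc/passwd"` is refused before any process starts.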

The real-world risk is severe. "The MCP server can access the host that runs the MCP and potentially allow a remote user to control your operating system," Backslash said in a blog post. In other words, an attacker could gain full control of the machine hosting the MCP server. Backslash's research found several MCP servers that contained both the NeighborJack vulnerability and excessive permissions, creating "a critical toxic combination."

In such cases, "anyone on the same network can take full control of the host machine running the server," enabling malicious actors to "run any command, scrape memory, or impersonate tools used by AI agents."

MCP Server Security Hub

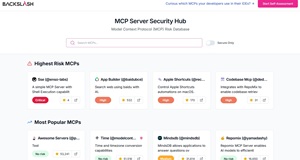

To directly address these vulnerabilities and the new attack surface presented by MCP servers, Backslash has established the MCP Server Security Hub, which, among other things, lists the highest-risk MCP servers.

MCP Server Security Hub (source: Backslash Security).

This platform is the first publicly searchable security database dedicated to MCP servers, the company said. It is a live, continuously maintained central database of more than 7,000 MCP server entries, with new entries added daily. The Hub's primary function is to score publicly available MCP servers on their risk posture. Each entry details the security risks associated with a given MCP server, including malicious patterns, code weaknesses, detectable attack vectors, and the server's origin. Backslash encourages anyone considering an MCP server to check it on the Hub first to assess its risk.

Recommendations

Unsurprisingly, Backslash Security's recommendations for countering threats to MCP servers start with using the MCP Server Security Hub. Other advice includes:

- Use the Vibe Coding Environment Self-Assessment Tool. To provide visibility into the vibe coding tools developers use, and to continuously assess the risk posed by LLMs, MCP servers, and IDE AI rules, Backslash has launched a free self-assessment tool for vibe coding environments.
- Validate Data Source for LLM Agents. Validate the source of the data your LLM agent receives to help prevent data source poisoning.
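The article does not describe a specific validation method. One simple, narrowly scoped approach, shown here as a hypothetical Python sketch rather than Backslash's method, is to let an agent ingest remote content only from an allowlist of HTTPS hosts. `TRUSTED_HOSTS`, its example.com entries, and `is_trusted_source` are placeholders. Host allowlisting narrows where data can come from; it does not detect poisoned content served by a host you already trust.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist; replace with the sources your agent is meant to trust.
TRUSTED_HOSTS = frozenset({"docs.example.com", "api.example.com"})


def is_trusted_source(url: str, trusted_hosts: frozenset = TRUSTED_HOSTS) -> bool:
    """Return True only for HTTPS URLs whose exact hostname is on the allowlist."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    # .hostname drops any "user@" prefix and port and is lowercased by urllib,
    # so "https://docs.example.com@evil.net/" is judged by "evil.net".
    host = parts.hostname or ""
    # Exact match: "docs.example.com.evil.net" must not pass as "docs.example.com".
    return host in trusted_hosts
```

Exact hostname matching is deliberately strict: substring or suffix checks are a common source of bypasses, since an attacker can register a lookalike domain that contains the trusted name.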

For more information, visit the Backslash Security blog.

About the Author

David Ramel is an editor and writer at Converge 360.